Benchmarking low-level I/O: C, C++, Rust, Golang, Java, Python

- The Tech Platform

- Jul 19, 2021

A TCP proxy is probably the simplest networked service to benchmark. It does no data processing; it only handles incoming and outgoing connections and relays raw bytes between them. It’s nearly impossible for a microservice to be faster than a TCP proxy, because it cannot do less than that, only more. Any other functionality (parsing, validating, traversing, packing, computing, and so on) is built on top of that.
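To make "relaying raw byte data" concrete, here is a minimal sketch of such a proxy using Python's asyncio. It is not the code of any of the benchmarked proxies; the names `relay`, `start_proxy`, and `CHUNK_SIZE` are made up for illustration, and the buffer size is an arbitrary assumption.

```python
import asyncio

CHUNK_SIZE = 64 * 1024  # read buffer size; an arbitrary choice for this sketch


async def relay(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy raw bytes from reader to writer until EOF, then close the writer."""
    try:
        while True:
            chunk = await reader.read(CHUNK_SIZE)
            if not chunk:  # an empty read means the peer closed its side
                break
            writer.write(chunk)
            await writer.drain()  # wait if the receiver is slower than the sender
    except ConnectionError:
        pass  # a reset from either peer simply ends the relay
    finally:
        writer.close()


async def start_proxy(listen_host, listen_port, backend_host, backend_port):
    """Start accepting connections and return the asyncio.Server object."""

    async def on_client(client_reader, client_writer):
        try:
            backend_reader, backend_writer = await asyncio.open_connection(
                backend_host, backend_port
            )
        except OSError:
            client_writer.close()  # backend unreachable: drop the client
            return
        # One relay per direction; the payload is never parsed or inspected.
        await asyncio.gather(
            relay(client_reader, backend_writer),
            relay(backend_reader, client_writer),
        )

    return await asyncio.start_server(on_client, listen_host, listen_port)
```

To run it standalone, awaiting `start_proxy(...)` and then `serve_forever()` on the returned server inside `asyncio.run` should be enough; the addresses you pass are up to you. Everything a real proxy adds (timeouts, limits, metrics) goes on top of this loop.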

The following solutions are being compared:

HAProxy, in TCP-proxy mode, for comparison with a mature solution written in C: http://www.haproxy.org/

draft-http-tunnel — a simple C++ solution with very basic functionality (trantor) (running in TCP mode): https://github.com/cmello/draft-http-tunnel/ (thanks to Cesar Mello, who coded it to make this benchmark possible).

http-tunnel — a simple HTTP-tunnel/TCP-proxy written in Rust (tokio) (running in TCP mode): https://github.com/xnuter/http-tunnel/ (you can read more about it here).

tcp-proxy — a Golang solution: https://github.com/jpillora/go-tcp-proxy

NetCrusher — a Java solution (Java NIO). Benchmarked on JDK 11, with G1: https://github.com/NetCrusherOrg/NetCrusher-java/

pproxy — a Python solution based on asyncio (running in TCP Proxy mode): https://pypi.org/project/pproxy/

All of the solutions above use non-blocking I/O. In my previous post, I tried to convince the reader that it’s the best way to handle network communication if you need highly available services with low latency and high throughput.

A quick note — I tried to pick the best solutions in Golang, Java, and Python, but if you know of better alternatives, feel free to reach out to me.

The actual backend is Nginx, configured to serve 10 KB of data in HTTP mode.

Benchmark results are split into two groups:

Baseline, C, C++, Rust: high-performance languages.

Rust, Golang, Java, Python: memory-safe languages.

Yep, Rust belongs to both worlds.

Brief description of the methodology

Two cores allocated for TCP Proxies (using cpuset).

Two cores allocated for the backend (Nginx).

Request rate starts at 10k, ramping up to 25k requests per second (rps).

Each connection is reused for 50 requests (10 KB per request).

Benchmarks ran on the same VM to avoid any network noise.

The VM instance type is compute optimized (exclusively owns all allocated CPUs) to avoid “noisy neighbors” issues.

The latency measurement resolution is microsecond (µs).
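As a rough illustration of what the load profile above implies, the sketch below builds a ramp of target rates and derives how many new connections per second the proxy has to accept when each connection is reused for 50 requests. The 5,000 rps step is an assumption made for this example (the step size is not stated here), and both function names are hypothetical.

```python
def ramp_schedule(start_rps: int, end_rps: int, step_rps: int) -> list[int]:
    """Target request rates from start_rps up to end_rps, inclusive."""
    if step_rps <= 0 or end_rps < start_rps:
        raise ValueError("need step_rps > 0 and end_rps >= start_rps")
    rates = list(range(start_rps, end_rps, step_rps))
    rates.append(end_rps)  # always finish exactly at the maximum rate
    return rates


def new_connections_per_second(rps: int, requests_per_connection: int) -> float:
    """Connections that must be opened each second to sustain the given rate."""
    return rps / requests_per_connection


# Assumed step of 5,000 rps between 10k and 25k.
schedule = ramp_schedule(10_000, 25_000, 5_000)
connection_rates = [new_connections_per_second(rps, 50) for rps in schedule]
```

With these inputs, `schedule` is `[10000, 15000, 20000, 25000]` and the connection rate grows from 200 to 500 new connections per second, so the proxies are exercised on connection setup and teardown as well as on byte relaying.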

We are going to compare the following statistics:

Percentiles (from p50 to p99) — key statistics.

Outliers (p99.9 and p99.99) — critical for components of large distributed systems (learn more).

Maximum latency — the worst case should never be overlooked.

Trimmed mean tm99.9 — the mean without the best/worst 0.1%, to capture the central tendency (without outliers).

Standard deviation — to assess the stability of the latency.
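For readers who want to reproduce these statistics on their own latency samples, here is a small sketch. It is not how perf-gauge computes them. It assumes nearest-rank percentiles, a trimmed mean that drops 0.1% of the samples from each end, and the population standard deviation; all function names are made up for this example.

```python
import math
import statistics


def percentile(sorted_values, p):
    """Nearest-rank percentile of an ascending list (0 < p <= 100)."""
    if not sorted_values:
        raise ValueError("no samples")
    # round() guards against float noise such as 999.0000000000001 before ceil().
    rank = math.ceil(round(p * len(sorted_values) / 100, 6))
    return sorted_values[max(rank, 1) - 1]


def trimmed_mean(sorted_values, trim_per_thousand=1):
    """Mean after dropping trim_per_thousand/1000 of the samples from EACH end."""
    k = len(sorted_values) * trim_per_thousand // 1000
    kept = sorted_values[k:len(sorted_values) - k]
    return statistics.fmean(kept)


def summarize(latencies_us):
    """Compute the statistics listed above for latencies given in microseconds."""
    data = sorted(latencies_us)
    return {
        "p50": percentile(data, 50),
        "p90": percentile(data, 90),
        "p99": percentile(data, 99),
        "p99.9": percentile(data, 99.9),
        "p99.99": percentile(data, 99.99),
        "max": data[-1],
        "tm99.9": trimmed_mean(data, trim_per_thousand=1),
        "stddev": statistics.pstdev(data),  # population std dev: an assumption
    }
```

A single extreme sample moves the maximum and the standard deviation a lot but leaves the trimmed mean untouched, which is why the two kinds of statistics are reported side by side.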

Feel free to learn more about the methodology and why these stats were chosen. To collect data, I used perf-gauge.

Okay, let’s talk about the results!

Comparing high-performance languages: C, C++, Rust

I’d often heard that Rust is on par with C/C++ in terms of performance. Let’s see what “on par” exactly means for handling network I/O.

See the four graphs below in the following order: Baseline, C, C++, Rust:

Green: p50, Yellow: p90, Blue: p99, in µs (on the left). Orange: rate (on the right)

Below, you’ll see how many microseconds are added on top of the backend for each statistic. The numbers below are averages of the interval at the max request rate (the ramp-up is not included):

Overhead, µs

In relative terms (overhead as the percentage of the baseline):

Overhead in % of the baseline statistic
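The two overhead figures are simple arithmetic on top of the per-interval measurements. The sketch below shows one way to compute them: average a statistic over the intervals at the maximum rate (skipping the ramp-up), then subtract the baseline and express the difference as a percentage of it. The function names are hypothetical and the sample values are invented purely to show the calculation; they are not benchmark results.

```python
from statistics import fmean


def steady_state_mean(points, max_rate):
    """Average a statistic over the intervals that ran at the maximum rate.

    points is a list of (request_rate, value_in_us) pairs, one per interval.
    """
    values = [value for rate, value in points if rate >= max_rate]
    if not values:
        raise ValueError("no intervals at the maximum rate")
    return fmean(values)


def overhead(proxy_us, baseline_us):
    """Return (overhead in µs, overhead as a percentage of the baseline)."""
    added = proxy_us - baseline_us
    return added, 100.0 * added / baseline_us


# Invented p50 values, for illustration only.
baseline_points = [(10_000, 180.0), (25_000, 200.0), (25_000, 200.0)]
proxy_points = [(10_000, 210.0), (25_000, 240.0), (25_000, 260.0)]

baseline_p50 = steady_state_mean(baseline_points, 25_000)
proxy_p50 = steady_state_mean(proxy_points, 25_000)
added_us, added_percent = overhead(proxy_p50, baseline_p50)
```

With the made-up inputs above, the proxy adds 50 µs at p50, which is 25% of the baseline. The same calculation applies to every other statistic.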

Interestingly, while the proxy written in C++ is slightly faster than both HAProxy and Rust at the p99.9 level, it is worse at p99.99 and the max. That, however, is probably a property of this particular implementation, which is very basic (it is built on callbacks rather than futures).

CPU and memory consumption were also measured. You can see those here.

In conclusion, all three TCP proxies, written in C, C++, and Rust, showed similar performance: lean and stable.

Comparing memory-safe languages: Rust, Golang, Java, Python

Now, let’s compare memory-safe languages. Unfortunately, the Java and Python solutions could not handle 25,000 rps on just two cores, so Java was benchmarked at 15,000 rps and Python at 10,000 rps.

See the four graphs below in the following order: Rust, Golang, Java, Python.

Green: p50, Yellow: p90, Blue: p99, in µs (on the left). Orange: rate (on the right)

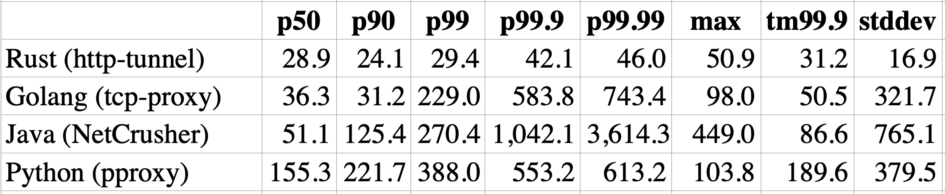

Well, now we see a drastic difference. What looked “noisy” for Rust on the previous graphs looks quite stable at the new scale. Also, notice the cold-start spike for Java. The numbers below are averages of the interval at the max request rate (the ramp-up is not included):

Overhead, µs

As you can see, Golang is somewhat comparable at the p50/p90 level. However, the difference grows dramatically for higher percentiles, which is reflected by the standard deviation. But look at the Java numbers!

It’s worth looking at the outlier (p99.9 and p99.99) percentiles. It’s easy to tell that the difference with Rust is dramatic:

Green: p99.9, Blue: p99.99, in µs (on the left). Orange: rate (on the right)

In relative terms (overhead as a percentage of the Nginx baseline):

Overhead in % of the baseline statistic

In conclusion, Rust has a much lower latency variance than Golang, Python, and especially Java. Golang is comparable with Rust at the p50/p90 level.

Maximum throughput

Another interesting question — what is the maximum request rate that each proxy can handle? Again, you can read the full benchmark for more info, but for now, here’s a brief summary.

Nginx was able to handle a little over 60,000 rps. Putting a TCP proxy between the client and the backend decreases the throughput. As you can see below, C, C++, Rust, and Golang achieved about 70%–80% of what Nginx served directly (roughly 42,000 to 48,000 rps), while Java and Python performed worse:

Left axis: latency overhead. Right axis: throughput

The blue line is tail latency (Y-axis on the left) — the lower, the better.

The grey bars are throughput (Y-axis on the right) — the higher, the better.

Conclusion

These benchmarks are not comprehensive, and the goal was to compare bare-bones I/O of different languages.

However, along with Benchmarks Game and TechEmpower, they show that Rust might be a better alternative to Golang, Java, or Python if predictable performance is crucial for your service. Also, before starting to write a new service in C or C++, it’s worth considering Rust: it’s as performant as C/C++, and on top of that:

It is memory-safe.

It is free of data races.

It makes it easy to write expressive code.

In many ways it’s as accessible and flexible as Python.

It forces engineers to develop an up-front design and an understanding of data ownership before launching a service in production.

It has a fantastic community and a vast library of components.

Those who have tried it, love it!

Source: Medium; Eugene Retunsky
