What is load balancing?

- The Tech Platform

- Nov 24, 2020

Load balancing is a technique used to distribute workloads uniformly across servers or other compute resources to optimize network efficiency, reliability and capacity. Load balancing is performed by an appliance -- either physical or virtual -- that identifies in real time which server in a pool can best meet a given client request, while ensuring heavy network traffic doesn't unduly overwhelm a single server.

In addition to maximizing network capacity and performance, load balancing provides failover. If one server fails, a load balancer immediately redirects its workloads to a backup server, thus mitigating the impact on end users.

Load balancing is usually categorized as operating at either Layer 4 (transport) or Layer 7 (application) of the OSI model. Layer 4 load balancers distribute traffic based on transport data, such as IP addresses and Transmission Control Protocol (TCP) port numbers. Layer 7 load balancers make routing decisions based on application-level characteristics, including HTTP header information and the actual contents of the message, such as URLs and cookies. Layer 7 load balancers are more common, but Layer 4 load balancers remain popular, particularly in edge deployments.
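To make the distinction concrete, here is a minimal sketch in Python. The `Request` fields, the pool names and the routing rules are all hypothetical and chosen only for illustration: the Layer 4 function looks at nothing but the destination port, while the Layer 7 function inspects the URL path and cookies.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    # Hypothetical, simplified view of an incoming request.
    client_ip: str
    dest_port: int
    path: str = "/"
    cookies: dict = field(default_factory=dict)


def route_layer4(req: Request) -> str:
    """Pick a pool using transport-level data only (destination port)."""
    return "tls-pool" if req.dest_port == 443 else "plain-pool"


def route_layer7(req: Request) -> str:
    """Pick a pool using application-level data (URL path, cookies)."""
    if req.path.startswith("/api/"):
        return "api-pool"
    if "session" in req.cookies:
        return "session-pool"
    return "static-pool"
```

A real Layer 7 balancer has to parse the application protocol before it can make this kind of decision, which is why it can do more but also costs more per request than a Layer 4 one.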

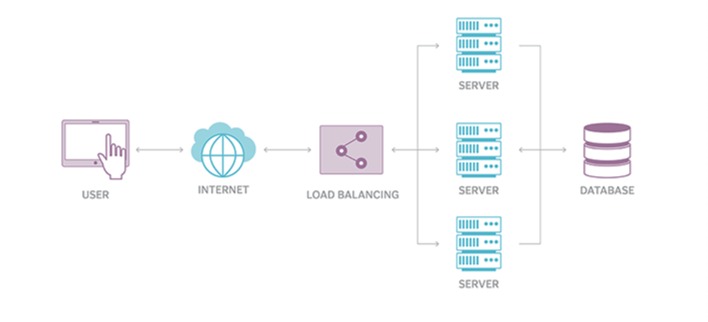

How load balancing works

Load balancers handle incoming requests from users for information and other services. They sit between the internet and the servers that handle those requests. Once a request is received, the load balancer first determines which servers in the pool are online and available, and then routes the request to one of them. During times of heavy load, a load balancer can dynamically add servers in response to spikes in traffic. Conversely, it can drop servers if demand is low.
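The "determine which server is available, then route" step can be sketched as follows. This is a simplified illustration, not how any particular product works: the server names are hypothetical, and the health status is assumed to be maintained elsewhere (for example, by periodic health checks).

```python
import random


def pick_available(servers: dict) -> str:
    """Return one server name from those currently marked healthy.

    `servers` maps a hypothetical server name to its health status
    (True means online and available).
    """
    healthy = [name for name, is_up in servers.items() if is_up]
    if not healthy:
        raise RuntimeError("no healthy servers in the pool")
    return random.choice(healthy)


pool = {"app-1": True, "app-2": False, "app-3": True}
chosen = pick_available(pool)  # never "app-2" while it is marked down
```

Random choice among healthy servers is used here only to keep the sketch short; the methods described later replace that last line with a more deliberate selection rule.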

A load balancer can be a physical appliance, a software instance or a combination of both. Traditionally, vendors have loaded proprietary software onto dedicated hardware and sold them to users as stand-alone appliances -- usually in pairs, to provide failover if one goes down. Growing networks require purchasing additional and/or bigger appliances.

In contrast, software load balancing runs on virtual machines (VMs) or white box servers, most likely as a function of an application delivery controller (ADC). ADCs typically offer additional features, such as caching, compression and traffic shaping. Popular in cloud environments, virtual load balancing can offer a high degree of flexibility -- for example, enabling users to automatically scale up or down to mirror traffic spikes or decreased network activity.
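As a rough illustration of scaling up or down to mirror traffic, the sketch below computes how many servers a pool should have for a given request rate. The function name, the per-server capacity figure and the minimum and maximum limits are assumptions made for this example, not defaults from any vendor.

```python
import math


def desired_servers(requests_per_sec: float, capacity_per_server: float,
                    min_servers: int = 1, max_servers: int = 10) -> int:
    """Return how many servers are needed for the current load.

    The result is clamped between `min_servers` and `max_servers`.
    """
    needed = math.ceil(requests_per_sec / capacity_per_server)
    return max(min_servers, min(max_servers, needed))


# With an assumed capacity of 100 requests per second per server:
busy = desired_servers(450, 100)   # 5 servers
quiet = desired_servers(20, 100)   # 1 server
```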

Load-balancing methods

Load-balancing algorithms determine which servers receive specific incoming client requests. Standard methods are as follows:

Least Connection Method — This method directs traffic to the server with the fewest active connections. This approach is quite useful when there are a large number of persistent client connections which are unevenly distributed between the servers.
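A minimal sketch of this selection rule, assuming the balancer already tracks an open-connection count per server (the server names are hypothetical):

```python
def least_connections(active: dict) -> str:
    """Return the server with the fewest active connections.

    `active` maps a hypothetical server name to its open-connection count.
    Ties go to whichever server appears first in the mapping.
    """
    return min(active, key=active.get)


target = least_connections({"app-1": 12, "app-2": 3, "app-3": 7})
# target == "app-2"
```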

Least Response Time Method — This algorithm directs traffic to the server with the fewest active connections and the lowest average response time.
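How the two signals are combined differs between implementations. The sketch below assumes one simple heuristic, multiplying active connections by average response time and picking the lowest score; the names and figures are hypothetical.

```python
def least_response_time(stats: dict) -> str:
    """Return the server with the lowest combined score.

    `stats` maps a hypothetical server name to a tuple of
    (active_connections, average_response_ms). The score used here,
    connections multiplied by response time, is an assumed heuristic.
    """
    def score(name):
        connections, avg_ms = stats[name]
        return connections * avg_ms

    return min(stats, key=score)


fastest = least_response_time({
    "app-1": (10, 20.0),  # score 200
    "app-2": (4, 80.0),   # score 320
    "app-3": (6, 30.0),   # score 180
})
# fastest == "app-3"
```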

Least Bandwidth Method — This method selects the server that is currently serving the least amount of traffic, measured in megabits per second (Mbps).

Round Robin Method — This method cycles through a list of servers and sends each new request to the next server. When it reaches the end of the list, it starts over at the beginning. It is most useful when the servers are of equal specification and there are not many persistent connections.
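The cycling behavior can be sketched in a few lines of Python using `itertools.cycle`. The server names are hypothetical.

```python
from itertools import cycle

servers = ["app-1", "app-2", "app-3"]  # hypothetical server names
rotation = cycle(servers)


def next_server() -> str:
    """Return the next server in the rotation, wrapping at the end."""
    return next(rotation)


picks = [next_server() for _ in range(5)]
# picks == ["app-1", "app-2", "app-3", "app-1", "app-2"]
```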

Weighted Round Robin Method — Weighted round-robin scheduling is designed to better handle servers with different processing capacities. Each server is assigned a weight, an integer value that indicates its processing capacity. Servers with higher weights receive new connections before those with lower weights, and they receive more connections overall.
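One naive way to sketch weighted round robin is to repeat each server in the rotation as many times as its weight, highest weight first. This is an illustrative simplification with hypothetical names and weights; production balancers usually interleave the picks more smoothly so that one server does not receive a burst of consecutive requests.

```python
from itertools import cycle


def weighted_rotation(weights: dict):
    """Return an endless iterator over server names.

    Naive expansion: each name is repeated `weight` times per cycle,
    highest weight first.
    """
    ordered = sorted(weights.items(), key=lambda item: item[1], reverse=True)
    expanded = [name for name, weight in ordered for _ in range(weight)]
    return cycle(expanded)


weighted = weighted_rotation({"small": 1, "big": 3})
weighted_picks = [next(weighted) for _ in range(8)]
# weighted_picks == ["big", "big", "big", "small", "big", "big", "big", "small"]
```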

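IP Hash — Under this method, a hash of the client's IP address is calculated and used to select the server that receives the request, so a given client is consistently sent to the same server as long as the pool does not change.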
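A minimal sketch of IP hashing, assuming a fixed list of hypothetical server names and a simple "hash modulo pool size" mapping:

```python
import hashlib


def server_for_ip(client_ip: str, servers: list) -> str:
    """Map a client IP address to a server using a stable hash.

    SHA-256 is used instead of Python's built-in hash(), which is
    randomized per process for strings and so would not be repeatable.
    """
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


backends = ["app-1", "app-2", "app-3"]
first = server_for_ip("198.51.100.4", backends)
second = server_for_ip("198.51.100.4", backends)  # same server as `first`
```

With this modulo mapping, adding or removing a server changes the result for many clients at once. That is a limitation of the sketch rather than of IP hashing in general.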

These algorithms vary significantly in sophistication and complexity. Weighted load-balancing algorithms, for example, also take server hierarchies into account, with preferred, high-capacity servers receiving more traffic than those assigned lower weights.

Benefits of load balancing

Users experience faster, uninterrupted service. Users won’t have to wait for a single struggling server to finish its previous tasks. Instead, their requests are immediately passed on to a more readily available resource.

Service providers experience less downtime and higher throughput. Even a full server failure need not noticeably affect the end-user experience, because the load balancer routes around the failed server to a healthy one.

Load balancing makes it easier for system administrators to handle incoming requests while decreasing wait time for users.

Smart load balancers provide additional benefits, such as predictive analytics that identify likely traffic bottlenecks before they happen. This gives an organization actionable insights that are key to automation and can help drive business decisions.

System administrators experience fewer failed or stressed components. Instead of a single device performing a lot of work, load balancing has several devices perform a little bit of work.

Source: TechTarget, Wikipedia
