What is Kubernetes?

- The Tech Platform

- Sep 19, 2020

Updated: Feb 16, 2024

Kubernetes, often abbreviated as k8s or "kube," is an open-source container orchestration platform designed to streamline the deployment, management, and scaling of containerized applications. It clusters together groups of hosts running Linux® containers and makes those clusters easy to manage across environments, including on-premises data centers and public, private, or hybrid clouds. This versatility makes Kubernetes an ideal platform for hosting cloud-native applications that need to scale rapidly, such as real-time data streaming with Apache Kafka.

Originally developed by engineers at Google, Kubernetes draws upon the company's extensive experience with container technology. Google's internal platform, Borg, served as the precursor to Kubernetes, with lessons learned from Borg's development significantly influencing Kubernetes' design and functionality.

Notably, the Kubernetes logo's seven spokes pay homage to the project's original name, "Project Seven of Nine." In 2015, Google donated Kubernetes to the Cloud Native Computing Foundation (CNCF), further solidifying its status as a leading open-source project.

What can you do with Kubernetes?

With Kubernetes, users gain the ability to orchestrate containers across multiple hosts, optimizing hardware resources for running enterprise applications efficiently. The platform facilitates automated application deployments and updates, enabling developers to scale containerized applications dynamically and manage services declaratively, ensuring consistent application behavior.
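Automated updates are typically handled by a Deployment's rolling update, which replaces pods gradually. Two settings, `maxSurge` and `maxUnavailable` (25% each by default), bound how many pods may exist and how many must stay available during the rollout. The Python sketch below computes those bounds; `rolling_update_bounds` is a hypothetical helper, not part of any Kubernetes client library, and it only handles percentage values, using the documented rounding (surge rounded up, unavailable rounded down).

```python
def rolling_update_bounds(replicas: int, max_surge_pct: int = 25,
                          max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Hypothetical helper: (max total pods, min available pods) during a rollout.

    Percentages are taken of the desired replica count; the surge is rounded
    up and the unavailable allowance is rounded down.
    """
    surge = -(-replicas * max_surge_pct // 100)          # ceiling division
    unavailable = replicas * max_unavailable_pct // 100  # floor division
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    print(rolling_update_bounds(10))  # (13, 8)
```

With 10 replicas and the defaults, the rollout may run up to 13 pods at once while keeping at least 8 available.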

Furthermore, Kubernetes lets developers build cloud-native applications using Kubernetes patterns: reusable designs for building container-based applications and services.

Key features and capabilities of Kubernetes include:

Orchestration: Managing containers across hosts efficiently.

Resource Optimization: Maximizing hardware resources for running enterprise applications.

Deployment Automation: Controlling and automating application deployments and updates.

Storage Management: Adding storage to run stateful applications.

Scalability: Scaling containerized applications and their resources dynamically.

Declarative Management: Ensuring deployed applications run as intended.

Health Monitoring: Checking app health and responding with auto-placement, auto-restart, auto-replication, and autoscaling.
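Auto-restart is carried out by the kubelet on each node: when a container exits and the Pod's `restartPolicy` allows it, the kubelet restarts it with an exponential back-off that the Kubernetes documentation gives as 10 seconds, 20, 40, and so on, capped at five minutes, with the timer reset once a container runs for 10 minutes without problems. The Python sketch below reproduces only that delay sequence; `restart_delays` is a hypothetical helper, not kubelet code, and the reset rule is not modeled.

```python
def restart_delays(restarts: int, base: float = 10.0, cap: float = 300.0) -> list[float]:
    """Delays (seconds) before each successive restart of a crash-looping container.

    Hypothetical helper: the delay starts at `base`, doubles each time, and never
    exceeds `cap`. The reset after a long healthy run is not modeled.
    """
    delays = []
    delay = base
    for _ in range(restarts):
        delays.append(min(delay, cap))  # never wait longer than the cap
        delay *= 2                      # exponential back-off
    return delays


if __name__ == "__main__":
    print(restart_delays(7))  # [10.0, 20.0, 40.0, 80.0, 160.0, 300.0, 300.0]
```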

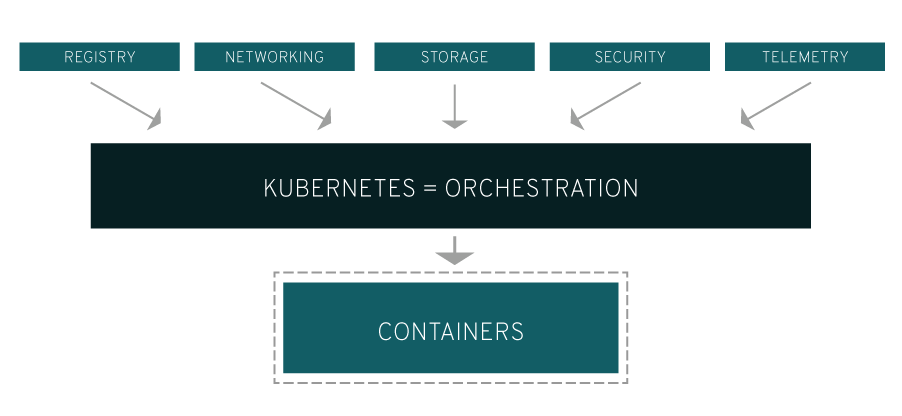

However, Kubernetes relies on complementary projects to provide fully orchestrated services. These include Docker Registry for registry services; Open vSwitch and intelligent edge routing for networking; Kibana, Hawkular, and Elastic for telemetry; LDAP, SELinux, RBAC, and OAuth for security; Ansible playbooks for automation; and a rich catalog of popular app patterns for services.

How does Kubernetes work?

Understanding Kubernetes jargon can sometimes feel like navigating a new language. Let's demystify some of the common terms to help you grasp Kubernetes concepts more easily:

Control plane: The nerve center of Kubernetes, comprising processes that manage nodes and coordinate tasks. It's where all task assignments originate.

Nodes: Worker machines in a Kubernetes cluster that execute tasks assigned by the control plane.

Pod: A logical unit in Kubernetes comprising one or more containers deployed together on a single node. Containers within a pod share networking, storage, and other resources, simplifying management and mobility across the cluster.

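Replication controller: Maintains the desired number of identical pod copies running in the cluster, providing scalability and fault tolerance. In current Kubernetes versions this job is usually done by a ReplicaSet, which a Deployment creates and manages.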

Service: Decouples work definitions from individual pods by giving a set of pods a stable network identity. Kubernetes service proxies automatically route each request to a suitable pod, no matter where that pod runs in the cluster or whether it has been replaced.

Kubelet: An agent that runs on each node and manages container execution. It reads container manifests and ensures that the containers they define are started and kept running.

kubectl: The command-line interface tool for Kubernetes, enabling users to interact with Kubernetes clusters, manage resources, and execute administrative tasks efficiently.
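As a small example of scripting against a cluster, the Python sketch below shells out to `kubectl get pods --output json` and summarizes each pod's `status.phase`. It assumes kubectl is installed and configured to reach a cluster; `get_pods_json` and `pod_phases` are hypothetical helper names. Parsing is kept separate from the command so it can be tried on saved output.

```python
import json
import subprocess


def get_pods_json(namespace: str = "default") -> str:
    """Run `kubectl get pods` for one namespace and return its JSON output.

    Assumes kubectl is on PATH and configured for a cluster.
    """
    result = subprocess.run(
        ["kubectl", "get", "pods", "--namespace", namespace, "--output", "json"],
        check=True, capture_output=True, text=True,
    )
    return result.stdout


def pod_phases(kubectl_json: str) -> dict[str, str]:
    """Map each pod's name to its status.phase (e.g. Pending, Running, Failed)."""
    pod_list = json.loads(kubectl_json)
    return {
        pod["metadata"]["name"]: pod.get("status", {}).get("phase", "Unknown")
        for pod in pod_list.get("items", [])
    }
```

Against a live cluster, `pod_phases(get_pods_json("default"))` would return a dictionary such as `{"web-1": "Running"}`.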

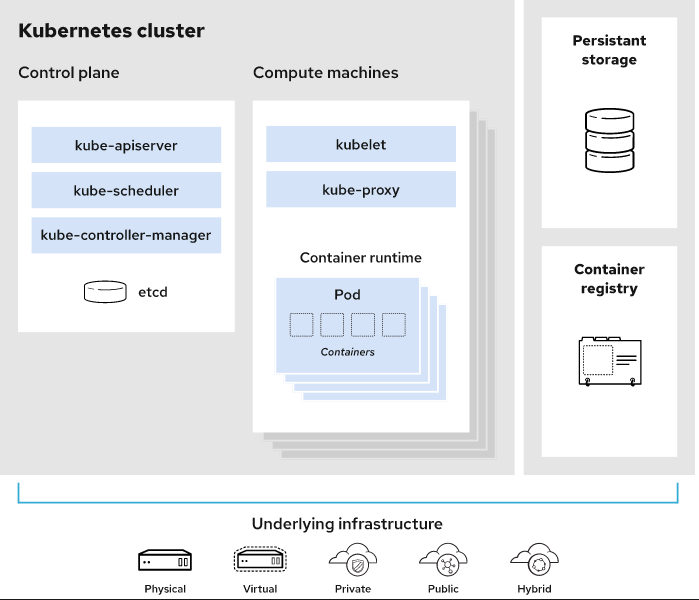

In Kubernetes, a functioning deployment is referred to as a cluster, comprising two primary components: the control plane and the compute machines, or nodes.

Each node operates within its own Linux® environment, whether physical or virtual, and is responsible for executing pods, which are collections of containers.

The control plane serves as the overseer of the cluster, maintaining its desired state by managing which applications are active and which container images they utilize. On the other hand, the compute machines are tasked with executing these applications and workloads.
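The control plane keeps the cluster in its desired state through reconciliation: controllers repeatedly compare what should be running with what is running and close the gap. The Python sketch below shows one such step for a replica count. It is a deliberately simplified, hypothetical model (`reconcile_replicas` is not a Kubernetes API); real controllers watch the API server and apply more careful rules when choosing which pods to delete.

```python
def reconcile_replicas(desired: int, running: list[str]) -> tuple[int, list[str]]:
    """Hypothetical reconcile step: return (pods_to_create, pods_to_delete).

    `running` lists the names of live pods that belong to this workload.
    """
    if desired < 0:
        raise ValueError("desired replica count cannot be negative")
    missing = desired - len(running)
    if missing > 0:
        return missing, []       # too few copies: create the difference
    return 0, running[desired:]  # too many (or exactly enough): trim any surplus


if __name__ == "__main__":
    print(reconcile_replicas(3, ["web-a"]))                    # (2, [])
    print(reconcile_replicas(2, ["web-a", "web-b", "web-c"]))  # (0, ['web-c'])
```

Run repeatedly, the step converges: once the running pods match the desired count, it requests no further changes.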

Kubernetes operates atop an operating system, such as Red Hat® Enterprise Linux®, and interacts directly with the pods of containers running on the nodes.

The control plane receives administrative commands and relays them to the compute machines. Working with components such as the scheduler, it determines which node is best suited to a given task, then allocates resources and assigns the pods needed to fulfill the requested work.
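The node-selection step is performed by the kube-scheduler, which first filters out nodes that cannot run a pod and then scores the remaining ones. The Python sketch below mimics that two-phase idea for CPU and memory requests only. The `Node` fields, the `pick_node` name, and the scoring rule (prefer the node left with the most free capacity, loosely modeled on a "least allocated" strategy) are simplifying assumptions; the real scheduler runs many filter and score plugins.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_capacity: int   # allocatable CPU in millicores
    cpu_requested: int  # millicores already requested by pods on this node
    mem_capacity: int   # allocatable memory in MiB
    mem_requested: int  # MiB already requested by pods on this node


def pick_node(nodes: list[Node], cpu: int, mem: int) -> Optional[str]:
    """Hypothetical filter-then-score placement for one pod's CPU/memory requests."""
    # 1. Filter: keep only nodes with room for the pod's requests.
    feasible = [n for n in nodes
                if n.cpu_capacity - n.cpu_requested >= cpu
                and n.mem_capacity - n.mem_requested >= mem]
    if not feasible:
        return None  # no fit: the pod would stay Pending

    # 2. Score: prefer the node left with the largest share of free capacity.
    def score(n: Node) -> float:
        cpu_free = (n.cpu_capacity - n.cpu_requested - cpu) / n.cpu_capacity
        mem_free = (n.mem_capacity - n.mem_requested - mem) / n.mem_capacity
        return (cpu_free + mem_free) / 2

    return max(feasible, key=score).name


if __name__ == "__main__":
    cluster = [Node("node-a", 4000, 3500, 8192, 1024),
               Node("node-b", 4000, 1000, 8192, 4096),
               Node("node-c", 2000, 0, 4096, 0)]
    print(pick_node(cluster, cpu=500, mem=512))   # node-c
    print(pick_node(cluster, cpu=3000, mem=512))  # node-b
```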

The desired state of a Kubernetes cluster encompasses specifications regarding active applications, container images, resource availability, and other configuration details.
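In practice, this desired state is written down declaratively, commonly as a Deployment. The Python sketch below builds such a manifest as a dictionary covering the items listed above: which application runs, its container image, how many replicas, and what resources each copy requests. Field names follow the `apps/v1` Deployment API; the helper name, image tag, and resource figures are illustrative assumptions.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Hypothetical helper: desired state for a stateless app as a Deployment."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,                        # how many copies should run
            "selector": {"matchLabels": dict(labels)},  # which pods this Deployment owns
            "template": {                                # what each copy looks like
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,                  # which container image to run
                        "resources": {                   # illustrative resource figures
                            "requests": {"cpu": "250m", "memory": "128Mi"},
                            "limits": {"memory": "256Mi"},
                        },
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment_manifest("web", "nginx:1.25", 3), indent=2))
```

Changing `replicas` or `image` and re-applying the manifest changes only the desired state; the control plane then works to make the cluster match it.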

From an infrastructure perspective, little changes in how you manage containers. Kubernetes simply gives you control at a higher level, so you no longer need to manage each container or node individually.

Your responsibilities involve configuring Kubernetes and defining nodes, pods, and their constituent containers, while Kubernetes handles the orchestration of these containers.

Kubernetes is highly flexible in terms of deployment options, supporting bare metal servers, virtual machines, public cloud providers, private clouds, and hybrid cloud environments. Its adaptability across diverse infrastructure types stands as one of its key advantages.

What is Docker?

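Docker has long played a central role in the Kubernetes ecosystem, both for building container images and as a container runtime that Kubernetes orchestrates. When Kubernetes schedules a pod to a node, the kubelet on that node instructs the container runtime to launch the specified containers, then monitors their status and reports it back to the control plane. Since Kubernetes 1.24 removed the built-in Docker integration (dockershim), the kubelet talks to runtimes such as containerd or CRI-O through the Container Runtime Interface (CRI); images built with Docker still run unchanged.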

The difference when Kubernetes is used with Docker is that an automated system, rather than an administrator working manually on every node, tells the runtime to pull, start, and stop containers. This streamlined process improves efficiency and scalability within the cluster.

Why do you need Kubernetes?

Kubernetes helps you deliver and manage all kinds of applications: containerized and legacy apps, modern cloud-native apps, and apps being refactored into microservices.

As businesses evolve to meet changing needs, development teams must rapidly build new applications and services. Cloud-native development, which starts with microservices encapsulated in containers, offers agility and efficiency, enabling faster development cycles and easier optimization of existing applications.

Kubernetes provides crucial orchestration and management capabilities necessary for deploying containerized workloads at scale. It enables the deployment of production applications, spanning multiple containers, across multiple server hosts.

With Kubernetes orchestration, organizations can construct application services spanning multiple containers, effectively scheduling, scaling, and managing container health over time. This not only enhances operational efficiency but also contributes to improved IT security measures.
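Scaling over time is often automated with the Horizontal Pod Autoscaler. Its documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no action taken when that ratio is within a tolerance (0.1 by default) of 1.0. The Python sketch below implements just that rule plus clamping to replica bounds; the function name and default bounds are assumptions, and the real controller adds behavior, such as stabilization windows, that is not modeled here.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float, min_replicas: int = 1,
                         max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Hypothetical helper applying the HPA's core scaling formula.

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0 and clamped to
    [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no change
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target: scale out to 6.
    print(hpa_desired_replicas(4, current_metric=90, target_metric=60))  # 6
```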

Moreover, Kubernetes integration with networking, storage, security, telemetry, and other services ensures a comprehensive container infrastructure, essential for supporting complex production environments and multiple applications.

In production, a single service often depends on multiple colocated containers working together. Kubernetes addresses this by grouping containers into "pods," a layer of abstraction that simplifies workload scheduling and makes it easier to provide those containers with services such as networking and storage.
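To illustrate, the Python function below builds a Pod manifest as a plain dictionary with two colocated containers: a web server and a helper that writes the page it serves into a shared `emptyDir` volume. Containers in one Pod also share a network namespace, so they could reach each other on `localhost`. The field names follow the core Pod API (`apiVersion: v1`, `kind: Pod`); the function name, container names, and image tags are illustrative assumptions. Because kubectl accepts JSON as well as YAML, the printed output could be saved to a file and applied with `kubectl apply -f`.

```python
import json


def two_container_pod(name: str) -> dict:
    """Hypothetical helper: a Pod whose two containers share one emptyDir volume."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # Scratch volume that lives as long as the Pod does.
            "volumes": [{"name": "shared-data", "emptyDir": {}}],
            "containers": [
                {
                    "name": "web",
                    "image": "nginx:1.25",  # illustrative image tag
                    "ports": [{"containerPort": 80}],
                    # nginx serves static files from this directory.
                    "volumeMounts": [{"name": "shared-data",
                                      "mountPath": "/usr/share/nginx/html"}],
                },
                {
                    "name": "content-writer",
                    "image": "busybox:1.36",  # illustrative image tag
                    # Rewrites the page in the shared volume every 5 seconds.
                    "command": ["sh", "-c",
                                "while true; do date > /data/index.html; sleep 5; done"],
                    "volumeMounts": [{"name": "shared-data", "mountPath": "/data"}],
                },
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(two_container_pod("demo"), indent=2))
```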

Furthermore, Kubernetes aids in load balancing across pods and ensures the appropriate number of containers are running to support varying workloads effectively.
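Load balancing across pods is the job of a Service. The Python sketch below is a toy model, not kube-proxy: it selects the ready pods whose labels match the Service's selector and hands requests to them in round-robin order. The class and field names are hypothetical; real kube-proxy programs kernel rules instead and, depending on its mode, may pick backends at random rather than in strict rotation.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class PodInfo:
    name: str
    ip: str
    labels: dict[str, str] = field(default_factory=dict)
    ready: bool = True


class ToyService:
    """Hypothetical model of a Service: a label selector plus round-robin routing."""

    def __init__(self, selector: dict[str, str]) -> None:
        self.selector = selector
        self._turn = itertools.count()

    def endpoints(self, pods: list[PodInfo]) -> list[PodInfo]:
        # A pod backs the Service only if it is ready and has every selector label.
        return [p for p in pods
                if p.ready and all(p.labels.get(k) == v for k, v in self.selector.items())]

    def route(self, pods: list[PodInfo]) -> str:
        # Endpoints are recomputed per request, so replaced pods are picked up.
        backends = self.endpoints(pods)
        if not backends:
            raise LookupError("no ready pods match the selector")
        return backends[next(self._turn) % len(backends)].ip


if __name__ == "__main__":
    pods = [PodInfo("web-1", "10.1.0.4", {"app": "web"}),
            PodInfo("web-2", "10.1.3.7", {"app": "web"}),
            PodInfo("db-1", "10.1.2.9", {"app": "db"}),
            PodInfo("web-3", "10.1.1.5", {"app": "web"}, ready=False)]
    svc = ToyService({"app": "web"})
    print([svc.route(pods) for _ in range(3)])  # ['10.1.0.4', '10.1.3.7', '10.1.0.4']
```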

By implementing Kubernetes alongside complementary open-source projects such as Open vSwitch, OAuth, and SELinux, organizations can orchestrate their container infrastructure comprehensively, leveraging the benefits of these additional tools to enhance functionality and security.

A tangible example of Kubernetes' transformative impact is demonstrated by Emirates NBD, a leading bank in the UAE. Faced with challenges related to slow provisioning and a complex IT environment, the bank leveraged Kubernetes to establish a scalable, resilient foundation for digital innovation. This initiative enabled Emirates NBD to streamline server provisioning, reduce application deployment times, and enhance collaboration between internal teams, ultimately driving digital transformation within the organization.

Support a DevOps approach with Kubernetes

Kubernetes supports a DevOps approach by streamlining the development and deployment of modern applications.

In the realm of modern application development, DevOps practices introduce a paradigm shift from traditional methodologies. DevOps accelerates the transition from idea conception to deployment by emphasizing automation of routine operational tasks and standardization of environments throughout an application's lifecycle.

Containers play a pivotal role in supporting DevOps principles by providing a unified environment for development, delivery, and automation. They enable seamless movement of applications across development, testing, and production environments, enhancing flexibility and consistency.

A significant outcome of implementing DevOps is the establishment of a continuous integration and continuous deployment (CI/CD) pipeline. CI/CD pipelines automate the process of delivering applications to customers at a rapid pace while ensuring software quality through frequent validation and testing, with minimal manual intervention.

When managing the lifecycle of containers with Kubernetes within a DevOps framework, software development and IT operations align seamlessly to support the CI/CD pipeline. Kubernetes orchestrates containerized workloads efficiently, ensuring consistency and reliability across various stages of the application lifecycle.

By leveraging the right platforms, both within and outside the container environment, organizations can maximize the benefits of cultural and process changes inherent in DevOps adoption. Kubernetes serves as a foundational pillar, enabling efficient collaboration between development and operations teams and fostering a culture of continuous improvement and innovation.

Using Kubernetes in production

Deploying Kubernetes in a production environment demands careful planning and components beyond the core Kubernetes engine. Because Kubernetes is open source, it comes without a formal support structure of its own, and relying on upstream Kubernetes alone for mission-critical operations can frustrate both internal teams and customers.

Think of Kubernetes as a car engine: it can run on its own, but to become part of a working vehicle it needs a transmission, axles, and wheels. In the same way, installing Kubernetes alone does not give you a production-grade platform.

To unlock the full potential of Kubernetes in production, you need supplementary tools and technologies for authentication, networking, security, monitoring, log management, and more.
