The Maturation of Data Science

- The Tech Platform

- Nov 30, 2020

Data science used to be something of a mystery, more of a dark art than a repeatable, scientific process. Companies basically entrusted powerful priests called data scientists to build magical algorithms that used data to make predictions, usually to boost profits or improve customer happiness. But in recent years, the field has matured to a remarkable degree, and that is enabling progress on multiple fronts, from ModelOps and reproducibility to ethics and accountability.

About five years ago, the worldwide scientific community was suffering a “reproducibility crisis” that affected a wide range of scientific endeavors, including so-called hard sciences like physics and chemistry. One of the hallmarks of the scientific method is that experiments must be reproducible: repeating an experiment should give the same results. But that lofty goal too often was not met.

Data science was not immune to this problem, which should not be surprising, given the relative newness and the probabilistic nature of the field. And when you mix in the black-box nature of deep learning models and data that reflects a rapidly changing world, it sometimes seems a miracle that an algorithm of any complexity could generate the same result at two points in time.

While there are still challenges to overcome in the reproducibility department, data science, as a whole, appears to be marching forward toward a better, more professional, and more predictable future. The discipline is not as wholly reliant on highly paid data scientists to do all the work as it once was. Bottlenecks in the workflows are being attacked and broken up. Ethics are being more clearly delineated, algorithms are more accountable, and overall, it’s just becoming more of a team sport that abides by the same laws and corporate standards that other parts of the business must follow.

Closing the Data Science Gap

This maturation of data science is a good thing, says Michael Berthold, the CEO of KNIME, which held its virtual Fall Summit 2020 last week.

“Quite a lot of people are becoming a lot more professional about their data science,” Berthold said during a session at the event. “It’s less the group in the corner doing cool stuff. Sure, they created amazing results. But every time you want something, you had to go back to them. It’s become a lot more mature.”

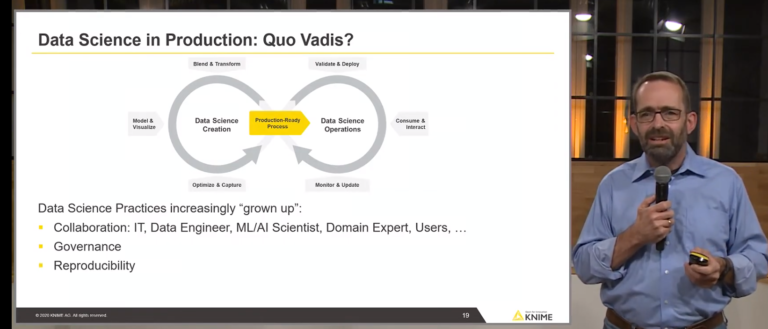

KNIME CEO Mike Berthold wants to unite data science development and operations

In particular, companies are getting better at operationalizing their data science projects, Berthold said. A key part of that improvement can be linked to the recognition that the tools and techniques needed to develop good data science applications aren’t necessarily the same tools and techniques that are needed for putting those models into production.

In fact, the demands of these two disciplines are so divergent that they require separate workflows and separate cycles, and they involve different people. Data scientists may be in charge of understanding the data and the business challenge at issue, and of building the models that predict outcomes within those constraints. But more often than not, another group of people is responsible for putting those models into production. The emerging ModelOps discipline (sometimes called MLOps) is beginning to resemble the DevOps practices of continuous integration and continuous delivery (CI/CD) that the rest of the IT world has adopted.

However, as the data science team diverges and development and production groups become more specialized, it becomes increasingly difficult to keep the two sides in synch. “Every time your data scientist changes something on this creation side, starts playing with new models, you need to also remember to update that production workflow, otherwise you’re out of synch,” Berthold said. “Big problems.”

Some data scientists would attempt to minimize the amount of code they had to juggle through clever if/then statements. “The best solution I’ve ever seen anywhere is somebody doing that in R, where they literally always wrote programs where the first line started with ‘if in production’ versus ‘if in creation,’” the KNIME CEO said. “They were trying to re-use as many code paths as possible.”
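The single-script approach Berthold describes can be sketched in Python (the program he mentions was written in R). This is a minimal illustration under stated assumptions: the `run` function, the `"creation"`/`"production"` mode names, the toy least-squares model, and the temp-file model location are all hypothetical. The idea it shows is that input preparation is one shared code path, and only the top-level branch decides whether to train or merely score.

```python
import json
import statistics
import tempfile
from pathlib import Path

# Hypothetical location for the saved model; a real deployment would use a
# model registry or server rather than a temp file.
MODEL_PATH = Path(tempfile.gettempdir()) / "toy_model.json"


def prepare(value):
    # Shared step used by BOTH modes, so cleaning logic cannot drift apart.
    return float(str(value).strip())


def train(pairs):
    # Toy model: ordinary least-squares fit of y = a * x + b.
    xs, ys = zip(*pairs)
    mx, my = statistics.mean(xs), statistics.mean(ys)
    a = sum((x - mx) * (y - my) for x, y in pairs) / sum((x - mx) ** 2 for x in xs)
    return {"a": a, "b": my - a * mx}


def run(mode, training_rows, new_value):
    x_new = prepare(new_value)
    if mode == "creation":
        # Creation side: (re)build the model from training data and save it.
        model = train([(prepare(x), prepare(y)) for x, y in training_rows])
        MODEL_PATH.write_text(json.dumps(model))
    elif mode == "production":
        # Production side: never retrain; load whatever creation last saved.
        model = json.loads(MODEL_PATH.read_text())
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    return model["a"] * x_new + model["b"]
```

Calling `run("creation", [(1, 3), (2, 5), (3, 7)], "4")` fits the line y = 2x + 1 and returns 9.0; a later `run("production", [], "10")` reuses the saved model and returns 21.0. The weakness Berthold points to remains: the two sides stay in sync only if someone remembers to rerun the creation branch after every change.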

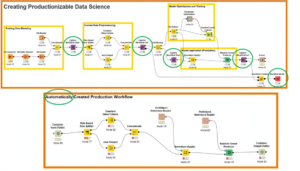

KNIME wants to unite the development and operations aspects of data science

KNIME recognized that something needed to exist at the juncture of these two worlds to help bring them back together, at least to an extent. To that end, the Swiss company unveiled new capabilities whereby changes made to a model in the KNIME Analytics Platform can be automatically propagated to the KNIME Server for production use. In Berthold’s workflow diagram, the new purple nodes link the development and production worlds together.

“Data science practices, in our mind and what we see, are increasingly growing up, and that means we need better ways to collaborate between the IT, the data engineer, your ML/AI scientists, the domain experts, and the users,” Berthold said. “They all need to work together. So having different tools that you need to [integrate] is a nightmare. Having that all as one part, one nice workflow, is of course a huge benefit.”

Several benefits flow from this, including better governance. “Being able to know it’s not relying on some strange library or some other tool, that it’s a workflow that does it all. That allows us of course much better governance on the IT side,” he said.

It also brings better reproducibility, which is “a gigantic aspect,” Berthold said. “Being able to run these workflows, run the production system three years later and still get the same results, has increasingly become important,” he said.
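One common way to make that kind of reproducibility checkable is to pin every source of randomness and store a fingerprint of the workflow's output, then compare against it on a later rerun. The sketch below is a generic illustration, not how KNIME implements reproducibility; `run_workflow`, `is_reproducible`, and the 80/20 split are hypothetical. A fixed seed is only part of the story: Python's documentation guarantees that `random()` reproduces its sequence for a given seed across versions, but not that helpers such as `shuffle()` will, so interpreter and library versions need pinning as well.

```python
import hashlib
import json
import random


def run_workflow(data, seed):
    # Hypothetical workflow step that involves randomness: a shuffled
    # 80/20 train/test split. A private, seeded Random instance makes the
    # shuffle repeatable without touching global random state.
    rng = random.Random(seed)
    rows = list(data)
    rng.shuffle(rows)
    cut = int(len(rows) * 0.8)
    train, test = rows[:cut], rows[cut:]
    return {"train_mean": sum(train) / len(train), "test_rows": sorted(test)}


def fingerprint(result):
    # Canonical JSON (sorted keys, fixed separators) so identical results
    # always serialize, and therefore hash, identically.
    blob = json.dumps(result, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()


def is_reproducible(data, seed, stored_fingerprint):
    # Rerun the workflow and compare against the fingerprint recorded earlier.
    return fingerprint(run_workflow(data, seed)) == stored_fingerprint
```

In use, one would record `fingerprint(run_workflow(data, seed=42))` alongside the deployed workflow; if `is_reproducible(data, 42, recorded)` later returns `False`, something in the data, code, or environment has changed.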

AI Ethics Improving

The increasing maturation of the data science field is also paying dividends when it comes to ethics and trustworthiness, which are emerging as big challenges for AI to overcome.

It wasn’t long ago that companies didn’t give a thought to how AI could go off the rails, said Ted Kwartler, DataRobot’s vice president of Trusted AI.

Companies are less tolerant of black boxes (ART-STOCK-CREATIVE/Shutterstock)

“Oftentimes companies initially are so excited to just fix a problem with AI or sprinkle AI on a problem, where the president of the company says ‘We need AI,’” Kwartler said. “People pop champagne to get their first model into production. They don’t think about governance. They don’t think about impact assessment. They don’t think about how things can spiral out of control.”

That mindset is changing, and now companies are acutely aware of the damage that a poorly developed data science application or AI system can do to their brand and their business, according to Kwartler, who leads a 40-person team at DataRobot dedicated to trust and explainability in AI.

“The market has woken up to it, and people now are really coming to us,” Kwartler told Datanami in a recent interview. “Our customer-facing folks are saying, hey we need a briefing on this. I think they see it as brand and reputational risk at first, and secondarily, now that so much of the historical patterns have changed given everything that’s happening, they’re thinking about how do I trust my model now that maybe these long held patterns in my old training data don’t hold true.”
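Kwartler's concern that long-held patterns in old training data may no longer hold is what monitoring tools call data drift. One widely used drift statistic is the Population Stability Index (PSI), which compares how a feature's values are distributed across bins at training time versus in recent production data. The sketch below is a generic PSI, not DataRobot's drift detection, whose method the article does not describe; the equal-width binning, the `eps` floor for empty bins, and the 0.1/0.25 cutoffs (a common rule of thumb, not a standard) are assumptions.

```python
import math
from bisect import bisect_right


def psi(expected, actual, n_bins=10, eps=1e-6):
    """Population Stability Index of `actual` (recent data) vs `expected` (training data)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins
    # Interior bin edges from the training range; values outside that range
    # fall into the first or last bin.
    edges = [lo + width * i for i in range(1, n_bins)]

    def shares(values):
        counts = [0] * n_bins
        for v in values:
            counts[bisect_right(edges, v)] += 1
        # Floor each share at eps so an empty bin does not produce log(0).
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    # PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_level(score):
    # Commonly cited rule-of-thumb cutoffs; tune them for your own data.
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate"
    return "significant"
```

Because (a_i − e_i) and ln(a_i / e_i) always share a sign, every term is non-negative, so the index is zero only when the binned distributions match and grows as recent data moves away from what the model was trained on.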

As the fear of incorporating racial or gender bias into production AI systems has seeped into the corporate hierarchy, executives are stepping up to prevent that from occurring. That often takes the form of MLOps monitoring, Kwartler said. However, this approach is not yet widespread.

AI bias is increasingly on the radar of business executives (FGC/Shutterstock)

“I would say it’s early days,” he said. “We have a pretty robust system, where we put guardrails in place, including Humble AI and drift detection and prediction explanation. When a prediction happens, you see what’s driving that prediction up and down, what are the three main causes for it. Those are the types of things that a robust MLOps system needs in terms of monitoring the lifecycle.”
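The idea of showing the “three main causes” behind a prediction can be illustrated with the simplest case, a linear model, where the gap between one prediction and the prediction for a baseline row (say, the training-data means) splits exactly into per-feature terms w_i × (x_i − baseline_i). This is a minimal sketch of that idea, not DataRobot's prediction-explanation method; the functions, feature names, weights, and values are hypothetical. Nonlinear models need dedicated attribution techniques to produce comparable per-feature contributions.

```python
def predict(intercept, weights, row):
    # Linear model: y = intercept + sum(w_i * x_i)
    return intercept + sum(w * row[f] for f, w in weights.items())


def explain(weights, baseline, row, top_k=3):
    # Each feature's contribution relative to the baseline row. For a linear
    # model these terms sum exactly to predict(row) - predict(baseline).
    contribs = {f: w * (row[f] - baseline[f]) for f, w in weights.items()}
    ranked = sorted(contribs.items(), key=lambda kv: abs(kv[1]), reverse=True)
    result = []
    for feature, c in ranked[:top_k]:
        direction = "up" if c > 0 else "down" if c < 0 else "none"
        result.append((feature, c, direction))
    return result


# Hypothetical credit-risk example: which features push this applicant's
# risk score up or down relative to an average applicant?
weights = {"debt_ratio": 2.0, "income_k": -0.03, "late_payments": 0.5, "tenure_years": -0.1}
baseline = {"debt_ratio": 0.25, "income_k": 60, "late_payments": 1, "tenure_years": 5}
applicant = {"debt_ratio": 0.75, "income_k": 40, "late_payments": 4, "tenure_years": 13}

for feature, contribution, direction in explain(weights, baseline, applicant):
    print(f"{feature}: {direction} by {abs(contribution):.2f}")
```

For this made-up applicant the loop prints `late_payments: up by 1.50`, `debt_ratio: up by 1.00`, and `tenure_years: down by 0.80`; the lower income also raises the score slightly (by 0.60) but falls outside the top three.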

While data scientists can focus on developing the best machine learning models using DataRobot’s tool, it’s often a group of technical and non-technical folks who use the MLOps system to ensure fairness and accountability.

“The reason I focus on MLOps is that’s where the rubber meets the road,” Kwartler said. “To me it’s super important because that’s the last final check before its impact is felt.”

As the field of data science matures, we’re finding out not just how it helps us, but also how it can hurt us. That companies like DataRobot are seeing demand for tooling to boost accountability and ethics is a sign that companies are figuring this out and working through it, which is a good thing for all of us.

Source: Paper.li
