Neural Network: 5 Essential Neural Network Algorithms

- The Tech Platform

- Oct 5, 2021


An artificial neural network, also called a neural network or simply a neural net, is a computational learning system that uses a network of functions to translate a data input of one form into a desired output, usually in another form. The concept was inspired by human biology, specifically the way neurons in the human brain work together to interpret inputs from the senses.

Neural networks are just one of many tools and approaches used in machine learning. A neural network may itself be used as a component in many different machine learning algorithms, transforming complex data inputs into a representation that computers can work with.

Neural networks are being applied to many real-life problems today, including speech and image recognition, spam email filtering, finance, and medical diagnosis, to name a few.

1. The feedforward algorithm…

In the feedforward step, n is a neuron on layer l, w is a weight value on layer l, and i is a value on layer l-1. All input values are set as the first layer of neurons. Each neuron on the following layers then takes the sum of all the neuron values on the previous layer, each multiplied by the weight that connects it to that neuron. This summed value is then passed through an activation function.
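
The weighted sum described above can be sketched for a single layer as follows. This is a minimal illustration, not the article's own code: the function names, the `weights[j][k]` layout, and the example numbers are assumptions, and bias terms are left out because the text does not mention them.

```python
import math

def sigmoid(x):
    """Logistic activation, used here as the example activation function."""
    return 1.0 / (1.0 + math.exp(-x))

def feedforward_layer(inputs, weights, activation=sigmoid):
    """Compute one layer's activated outputs.

    Assumed layout: weights[j][k] connects neuron k on the previous
    layer to neuron j on the current layer.
    """
    outputs = []
    for neuron_weights in weights:
        # Sum of previous-layer values multiplied by their connecting weights.
        z = sum(w * i for w, i in zip(neuron_weights, inputs))
        # The summed value is then activated.
        outputs.append(activation(z))
    return outputs

# Hypothetical example: two inputs feeding a layer of two neurons.
inputs = [1.0, 0.0]
weights = [[0.5, -0.5], [0.0, 0.0]]
layer_output = feedforward_layer(inputs, weights)
```

Calling `feedforward_layer` once per layer, feeding each layer's output into the next, gives the full forward pass.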

2. A common activation algorithm: Sigmoid…

No matter how high or low the input value, the sigmoid function squashes it to a value between 0 and 1. The output is often read as a probability, reflecting the neuron's level of activation or confidence. Because the function is nonlinear, it introduces nonlinearity into the model, allowing it to capture relationships that a purely linear combination of inputs cannot.
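
As a rough sketch, the sigmoid is S(x) = 1 / (1 + e^(-x)), and its derivative can be written as S(x)(1 - S(x)). The branch on the sign of `x` below is an added precaution against overflow for large negative inputs, not something the article describes, and the function names are assumptions.

```python
import math

def sigmoid(x):
    """Map any real input to a value strictly between 0 and 1."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the equivalent form e^x / (1 + e^x)
    # so math.exp never receives a large positive argument.
    e = math.exp(x)
    return e / (1.0 + e)

def sigmoid_prime(x):
    """Derivative of the sigmoid, S'(x) = S(x) * (1 - S(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)

# Very low, neutral, and very high inputs all land between 0 and 1.
values = [sigmoid(x) for x in (-10.0, 0.0, 10.0)]
```

The derivative is included because the back propagation step relies on it.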

3. The cost function…

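The squared cost function measures the error by taking the difference between each output value and its target value and squaring it, so that positive and negative differences do not cancel out. For classification, the target (desired) values could be a binary vector, with 1 for the correct class and 0 elsewhere.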
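
A minimal sketch of a squared cost follows. The factor of 1/2 is a common convention assumed here (it makes the derivative simply output minus target), and the article may use a different scaling. The function names and example values are hypothetical.

```python
def squared_cost(outputs, targets):
    """Half the sum of squared differences between outputs and targets."""
    return 0.5 * sum((o - t) ** 2 for o, t in zip(outputs, targets))

def cost_derivative(outputs, targets):
    """Derivative of the cost with respect to each output value."""
    return [o - t for o, t in zip(outputs, targets)]

# Hypothetical three-class example with a binary target vector.
outputs = [0.8, 0.2, 0.1]
targets = [1.0, 0.0, 0.0]
cost = squared_cost(outputs, targets)
errors = cost_derivative(outputs, targets)
```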

4. The back propagation…

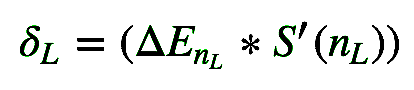

The error from the cost function is then passed back by multiplying it by the derivative of the sigmoid function, S'. This product defines δ at the output layer, where back propagation begins.

The error is then calculated through each earlier layer in turn. Each δ can be considered the recursive accumulation of change so far that contributed to the error, from the perspective of each individual neuron. The weights connecting a layer to the one after it must be transposed so that the error maps back onto that layer's neurons.

Finally, this change can be traced back to an individual weight by multiplying δ by the activated value of the neuron that feeds into that weight.
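
The three back propagation steps can be sketched as below, assuming sigmoid activations and an error defined as output minus target. The function names and the `next_weights[j][k]` layout are assumptions for illustration. Indexing the weights by `[j][k]` while looping over `k` is what plays the role of the transpose.

```python
def sigmoid_prime_from_output(a):
    """S' expressed through the already activated value a = S(z)."""
    return a * (1.0 - a)

def output_delta(outputs, targets):
    """Delta at the output layer: error multiplied by S'."""
    return [(o - t) * sigmoid_prime_from_output(o)
            for o, t in zip(outputs, targets)]

def hidden_delta(next_weights, next_delta, activations):
    """Delta for an earlier layer.

    Assumed layout: next_weights[j][k] connects neuron k on this layer
    to neuron j on the following layer, so summing over j for a fixed k
    applies the transposed weight matrix.
    """
    deltas = []
    for k, a in enumerate(activations):
        back = sum(next_weights[j][k] * next_delta[j]
                   for j in range(len(next_delta)))
        deltas.append(back * sigmoid_prime_from_output(a))
    return deltas

def weight_gradients(delta, inputs):
    """Change for each weight: delta times the activated input value."""
    return [[d * i for i in inputs] for d in delta]

# Hypothetical example: two hidden neurons feeding one output neuron.
hidden = [0.5, 0.5]
d_out = output_delta([0.5], [1.0])
d_hid = hidden_delta([[2.0, -2.0]], d_out, hidden)
grads = weight_gradients(d_out, hidden)
```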

5. Applying the learning rate/weight updating…

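The change now needs to be used to adapt the weight value. Each weight is moved a small step in the direction that reduces the error, and the size of that step is set by the learning rate, η (eta).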
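
A minimal sketch of the update, assuming the change was computed with the error defined as output minus target, so that subtracting it reduces the cost. The function name and the example numbers are hypothetical.

```python
def update_weights(weights, gradients, eta):
    """Return new weights after one step scaled by the learning rate eta."""
    return [
        [w - eta * g for w, g in zip(w_row, g_row)]
        for w_row, g_row in zip(weights, gradients)
    ]

# Hypothetical values: one output neuron with two incoming weights.
weights = [[2.0, -2.0]]
gradients = [[-0.0625, -0.0625]]
new_weights = update_weights(weights, gradients, eta=0.5)
```

A smaller `eta` makes learning slower but steadier. A larger one takes bigger steps and may overshoot.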

These are the five main algorithms needed to get a neural network running.
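
To show how the five steps fit together, here is a small end-to-end sketch. Everything specific in it is an assumption chosen for illustration: the logical OR dataset, the 2-2-1 network size, the constant bias input appended to each layer, the random seed, the learning rate, and updating the weights after every example.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def forward(x, w_hidden, w_out):
    """Feedforward through one hidden layer; 1.0 is an assumed bias input."""
    xb = x + [1.0]
    hidden = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in w_hidden]
    hb = hidden + [1.0]
    output = [sigmoid(sum(w * v for w, v in zip(row, hb))) for row in w_out]
    return xb, hb, output

def train_epoch(data, w_hidden, w_out, eta):
    """One pass over the data; returns the summed squared cost seen."""
    total_cost = 0.0
    for x, target in data:
        xb, hb, output = forward(x, w_hidden, w_out)
        # Cost: half the squared difference between output and target.
        total_cost += 0.5 * sum((o - t) ** 2 for o, t in zip(output, target))
        # Back propagation: delta at the output layer, then the hidden layer.
        d_out = [(o - t) * o * (1.0 - o) for o, t in zip(output, target)]
        d_hid = [
            sum(w_out[j][k] * d_out[j] for j in range(len(d_out)))
            * hb[k] * (1.0 - hb[k])
            for k in range(len(w_hidden))
        ]
        # Weight update: delta times input value, scaled by eta.
        for j, d in enumerate(d_out):
            for k, h in enumerate(hb):
                w_out[j][k] -= eta * d * h
        for j, d in enumerate(d_hid):
            for k, v in enumerate(xb):
                w_hidden[j][k] -= eta * d * v
    return total_cost

random.seed(0)
w_hidden = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(2)]
w_out = [[random.uniform(-1, 1) for _ in range(3)]]

# Logical OR as a toy dataset: (inputs, target).
data = [([0.0, 0.0], [0.0]), ([0.0, 1.0], [1.0]),
        ([1.0, 0.0], [1.0]), ([1.0, 1.0], [1.0])]

costs = [train_epoch(data, w_hidden, w_out, eta=0.5) for _ in range(2000)]
```

Comparing `costs[0]` with `costs[-1]` shows whether the cost fell during training.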

These algorithms only scratch the surface of how powerful neural networks can be and how they could affect business and society. It is worth understanding how an exciting technology is designed, and these five algorithms make a good introduction.

Applications of Neural Networks:

Neural networks can be applied to a broad range of problems and can assess many different types of input, including images, videos, files, databases, and more.

They also do not require explicit programming to interpret the content of those inputs.

Because neural networks offer a generalized approach to problem solving, there are few limits on the areas to which this technique can be applied.

Some common applications of neural networks today include image and pattern recognition, self-driving vehicle trajectory prediction, facial recognition, data mining, email spam filtering, medical diagnosis, and cancer research. There are many more ways that neural nets are used today, and adoption is increasing rapidly.
