Google Translate launched in 2006 with phrase-based machine translation as its key algorithm. Google later introduced other machine learning advances that changed the way we approach foreign languages. The sections below look at the machine learning methods Google has used for its translation services.

Google Neural Machine Translation

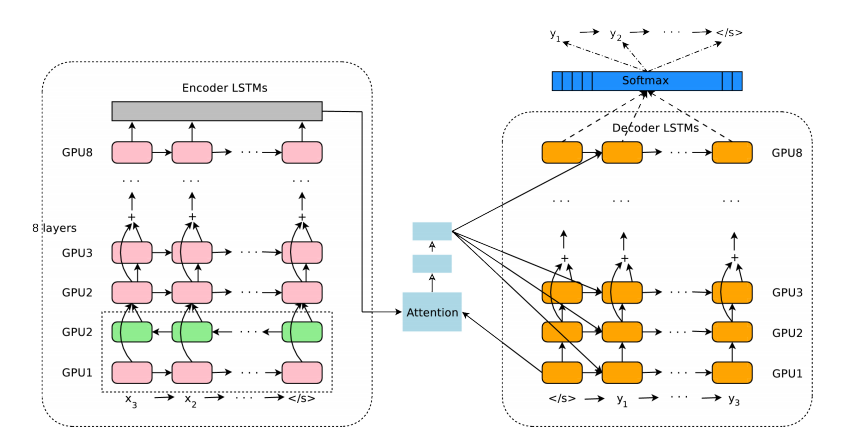

The main improvement in translation quality came with the introduction of Google Neural Machine Translation (GNMT). As shown above, its architecture consists of an encoder network on the left and a decoder network on the right, with an attention module sitting between the two. A typical setup has 8 encoder LSTM layers and 8 decoder LSTM layers. In a human side-by-side evaluation on a set of isolated simple sentences, GNMT reduced translation errors by an average of 60% compared with Google’s phrase-based production system.

Zero-Shot Translation With NMT

While GNMT significantly improved translation quality, scaling it up to newly supported languages was a major challenge. The question was whether the system could translate between a language pair it had never seen before. The modified GNMT was tasked with translating between Korean and Japanese, even though no Korean⇄Japanese examples had been shown to it during training. Impressively, the model generated reasonable Korean⇄Japanese translations despite never having been taught to do so. This was called “zero-shot” translation. Introduced in 2016, it was one of the first successful demonstrations of transfer learning for machine translation.
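The article does not say how GNMT was modified. In the published multilingual system, the change was to prepend an artificial token to each source sentence naming the desired output language. The sketch below shows only that data-preparation step. The toy corpus, the `<2xx>` token spelling, and the function names are illustrative assumptions, and no model is trained here.

```python
# Hypothetical toy corpus: (source_lang, target_lang, source_text, target_text).
# Note that no Korean<->Japanese pair appears in it.
TRAINING_PAIRS = [
    ("en", "ja", "hello", "こんにちは"),
    ("ja", "en", "こんにちは", "hello"),
    ("en", "ko", "hello", "안녕하세요"),
    ("ko", "en", "안녕하세요", "hello"),
]


def tag_source(text, target_lang):
    """Prepend an artificial token naming the desired output language."""
    return f"<2{target_lang}> {text}"


def build_examples(pairs):
    """Turn raw pairs into (tagged source, target) training examples."""
    return [(tag_source(src, tgt_lang), tgt) for _, tgt_lang, src, tgt in pairs]


def seen_directions(pairs):
    """Collect the (source_lang, target_lang) directions present in training."""
    return {(src_lang, tgt_lang) for src_lang, tgt_lang, _, _ in pairs}


examples = build_examples(TRAINING_PAIRS)

# A Korean -> Japanese request is formed exactly like any other input,
# even though that direction never occurs in the training pairs.
zero_shot_input = tag_source("안녕하세요", "ja")
is_zero_shot = ("ko", "ja") not in seen_directions(TRAINING_PAIRS)
```

Because every language pair shares one model and one input format, asking for an unseen direction needs no architectural change, only a different token.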

Transformer: The Turning Point

The introduction of the Transformer architecture revolutionized the way we deal with language. In the seminal paper “Attention Is All You Need”, Google researchers introduced the Transformer, which later led to other successful models such as BERT. Consider translating the sentence “I arrived at the bank after crossing the river.” To determine that “bank” refers to the shore of a river and not a financial institution, the Transformer can attend directly to the word “river” and make that decision in a single step. Another intriguing aspect of the Transformer is that developers can visualise which other parts of a sentence the network attends to when translating a given word, gaining insight into how information travels through the network.
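The mechanism behind this single-step decision is scaled dot-product self-attention. Below is a minimal sketch in plain Python. It assumes the queries, keys and values are the raw token vectors themselves; a real Transformer applies learned projections and uses multiple heads. The embedding values are invented for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with Q = K = V = vectors (a simplification)."""
    dim = len(vectors[0])
    all_weights, outputs = [], []
    for query in vectors:
        # Similarity of this token to every token, scaled by sqrt(dimension).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        all_weights.append(weights)
        # Each output is a weighted average of all token vectors.
        outputs.append(
            [sum(w * vec[i] for w, vec in zip(weights, vectors)) for i in range(dim)]
        )
    return outputs, all_weights


# Hypothetical 4-dimensional embeddings, made up for this example.
tokens = ["bank", "river", "the"]
embeddings = [
    [1.0, 0.0, 1.0, 0.0],  # bank
    [1.0, 0.0, 0.8, 0.2],  # river
    [0.0, 1.0, 0.0, 0.0],  # the
]
outputs, weights = self_attention(embeddings)
```

Here `weights[0]` holds how strongly “bank” attends to each token. With these made-up vectors it attends more to “river” than to “the”, and a matrix of such weights is the kind of thing developers can visualise.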

Translating Foreign Menu With A CNN

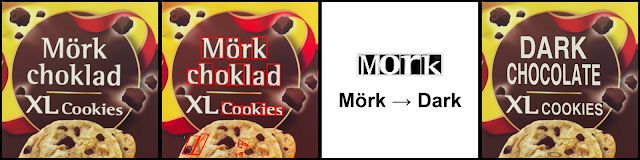

Five years ago, Google announced that the Google Translate app could perform real-time visual translation for multiple languages. When an image is fed to the app, it first finds the letters in the picture and isolates them from background objects such as trees or cars. To do this, the model looks for blobs of pixels that have a similar colour to each other and that are near other similar blobs of pixels. The app then recognises what each letter actually is with the help of a convolutional neural network. Next, the recognised letters are looked up in a dictionary to get translations. Once found, the translation is rendered on top of the original words in the same style as the original.
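The first step, grouping nearby pixels of similar colour into blobs, can be illustrated with a simple flood fill over a greyscale grid. This is only a sketch of the general idea and not Google's implementation. The `tolerance` and darkness thresholds and the tiny image are assumptions, and the CNN recognition and dictionary lookup steps are omitted.

```python
from collections import deque


def find_blobs(image, tolerance=10):
    """Group 4-connected pixels whose intensity differs from a neighbour by <= tolerance."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c]:
                continue
            seen[r][c] = True
            queue = deque([(r, c)])
            blob = []
            while queue:
                y, x = queue.popleft()
                blob.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (
                        0 <= ny < rows
                        and 0 <= nx < cols
                        and not seen[ny][nx]
                        and abs(image[ny][nx] - image[y][x]) <= tolerance
                    ):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            blobs.append(blob)
    return blobs


# Toy greyscale image: bright background (200) with two dark vertical strokes.
image = [
    [200, 200, 200, 200, 200],
    [200,  20, 200,  25, 200],
    [200,  22, 200,  24, 200],
    [200, 200, 200, 200, 200],
]

blobs = find_blobs(image)
# Keep only dark blobs as letter candidates (the 128 cutoff is an assumption).
letter_blobs = [b for b in blobs if image[b[0][0]][b[0][1]] < 128]
```

Each entry in `letter_blobs` is a list of pixel coordinates that could be cropped and passed to a character classifier.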

Speech Translation With Translatotron

Traditional cascaded systems translate speech by passing through an intermediate text representation. With Translatotron, Google demonstrated that a single sequence-to-sequence model can translate speech in one language directly into speech in another, without that intermediate text. Translatotron is claimed to be the first end-to-end model to do so, and it was also able to retain the source speaker’s voice in the translated speech.

Reducing Gender Bias In Translate

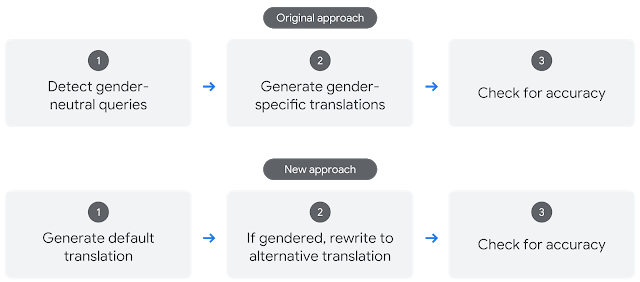

Showing gender-specific translations with a neural machine translation (NMT) system is a challenge. Last month, Google announced an improved approach, Rewriter, that uses rewriting or post-editing to address gender bias. After the initial translation is generated, it is reviewed to identify instances where a gender-neutral source phrase yielded a gender-specific translation. In that case, a sentence-level rewriter is applied to generate an alternative gendered translation. Finally, both the initial and the rewritten translations are reviewed to ensure that the only difference is the gender. A one-layer transformer-based sequence-to-sequence model was trained on automatically generated data to perform the rewriting.
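The final check, that the two translations differ only in gender, can be sketched as a token-by-token comparison. The word list and the whitespace tokenisation below are hypothetical simplifications chosen for illustration. The source does not describe how Google performs this review.

```python
# Hypothetical, deliberately tiny list of gendered English word pairs.
GENDER_PAIRS = {
    ("he", "she"),
    ("him", "her"),
    ("his", "her"),
    ("himself", "herself"),
}


def differs_only_in_gender(initial, rewritten):
    """Return True if the sentences differ, and only by swapped gendered words."""
    a = initial.lower().split()
    b = rewritten.lower().split()
    if len(a) != len(b):
        return False
    changed = False
    for x, y in zip(a, b):
        if x == y:
            continue
        if (x, y) in GENDER_PAIRS or (y, x) in GENDER_PAIRS:
            changed = True
        else:
            # Some other word changed, so the rewrite altered more than gender.
            return False
    return changed


ok = differs_only_in_gender("He is a doctor", "She is a doctor")
bad = differs_only_in_gender("He is a doctor", "She is a nurse")
```

Only when the check passes would both the masculine and feminine translations be shown to the user.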

Apart from the models mentioned above, many other techniques, such as back-translation, have played a crucial role in the way we use translation apps.
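Back-translation creates extra training data by running monolingual target-language text through a reverse (target-to-source) translator and pairing each synthetic source sentence with its real target sentence. The sketch below shows that pairing step only. The word-by-word lexicon stands in for a real reverse translation model, and every name in it is a hypothetical placeholder.

```python
# Hypothetical Spanish -> English lexicon standing in for a reverse NMT model.
REVERSE_LEXICON = {"hola": "hello", "mundo": "world", "gato": "cat"}


def toy_reverse_translate(sentence):
    """Word-by-word stand-in for a target-to-source translation model."""
    return " ".join(REVERSE_LEXICON.get(word, word) for word in sentence.split())


def back_translate(monolingual_target, reverse_translate):
    """Pair each real target sentence with a synthetic source sentence."""
    return [(reverse_translate(t), t) for t in monolingual_target]


# Monolingual Spanish text with no human-written English counterpart.
spanish_only = ["hola mundo", "gato"]
synthetic_pairs = back_translate(spanish_only, toy_reverse_translate)
```

The resulting (synthetic English, real Spanish) pairs can be added to the genuine parallel data when training an English-to-Spanish model.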

Source: Paper.li