Installing Apache Spark in Alibaba Cloud ECS

- Gavaskar S

- Sep 4, 2020

- 1 min read

Updated: Mar 14, 2023

Apache Spark is lightning fast Cluster computing technology, which consists of a unified analytics engine for large-scale data processing, particularly used for big data and machine learning applications. It can be used to process streaming queries and batch processing.

Figure1:Apache Spark Components

Features of Apache Spark are

It runs the workload faster with good performance for batch and stream processing.

Using spark we can write application quickly using scala, JAVA, Python, R and SQL languages.

It consists of libraries/components such as SQL and DataFrames, MLlib for machine learning, GraphX, and Spark Streaming.

Spark runs on Hadoop, Apache Mesos, Kubernetes, standalone, or in the cloud.

Steps to Install Apache Spark in Alibaba Cloud ECS

Step-1:Prerequisites: Install the update using the following command

sudo apt update

sudo apt -y upgradeStep-2: Install the latest version of JAVA package by using the following command

sudo apt install default-jdkStep-3: Install Scala Language feature by using the below command

sudo apt-get install scalaStep-4: Checking whether Scala is installed properly by using the command and run a simple program in scala.

root@ECS-Review:/opt#ScalaThe output displayed will be

Welcome to Scala 2.11.12 (OpenJDK 64-Bit Server VM, Java 11.0.7).Type in expressions for evaluation. Or try :help.

scala> println("Hello World")

Hello WorldStep5: Install Apache Spark by using the below command

root@ECS-Review:/opt#wget https://downloads.apache.org/spark/spark-3.0.0/spark-3.0.0-bin-hadoop2.7.tgz

root@ECS-Review:/opt#tar -xzvf spark-3.0.0-bin-hadoop2.7.tgzStep-6: update ./bashrc file by using the below code

root@ECS-Review:/opt/spark-3.0.0-bin-hadoop2.7/bin# vi ~/.bashrcStep-7: Set the SPARK_HOME path by adding the below line in ./.bashrc file

export SPARK_HOME=/opt/spark-3.0.0-bin-hadoop2.7

export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbinstep-8:Run the below command to update .bashrc file

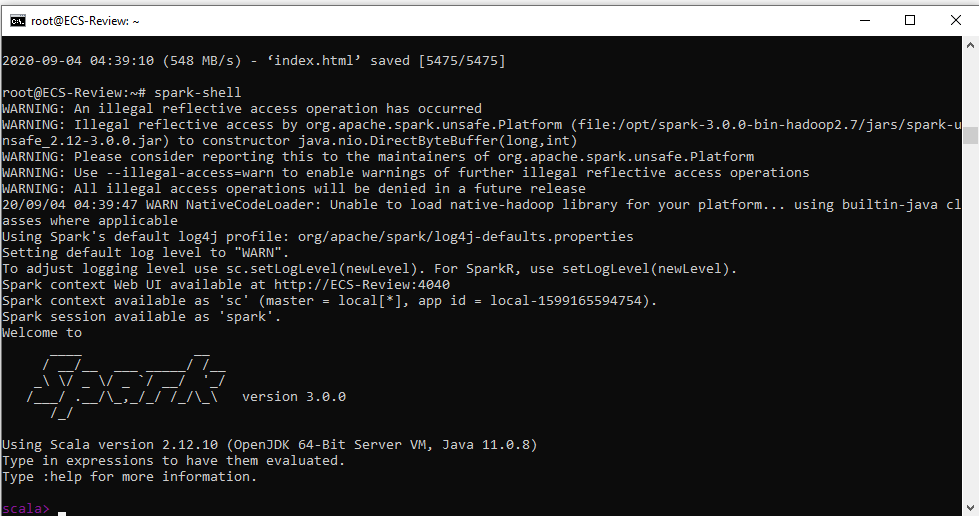

root@ECS-Review:/opt/spark-3.0.0-bin-hadoop2.7/bin# source ~/.bashrcStep-9:Finally run Apache Spark by using the below command and the Spark command prompt will be displayed as shown in figure below

root@ECS-Review:/opt/spark-3.0.0-bin-hadoop2.7/bin# start-master.sh

Comments