Azure AI Studio: How to find the perfect model for your task?

- The Tech Platform

- Mar 11, 2024

- 5 min read

Choosing the right AI model for your project can feel like searching for a needle in a haystack. There are countless options available, each with its strengths and weaknesses. But what if there was a way to streamline the selection process and identify the ideal model for your specific task?

Enter Azure AI Studio's Model Benchmarks feature. It lets you move beyond guesswork and make data-driven decisions when selecting an AI model. In this article, we'll look at what Model Benchmarks offers, how it simplifies model selection, and how to use it to shortlist models that perform well on your task.

Model Benchmark in Azure AI Studio

Model benchmarks in Azure AI Studio provide a valuable tool for users to review and compare the performance of various AI models. This feature is designed to cater to the diverse needs of developers, data scientists, and machine learning experts.

Azure AI Studio offers a suite of tools and services that cover the journey from concept to evaluation to deployment. Generative AI applications built in Azure AI Studio use Large Language Models (LLMs) to generate text from input prompts. The quality of the generated text depends on, among many other things, the performance of the LLM.

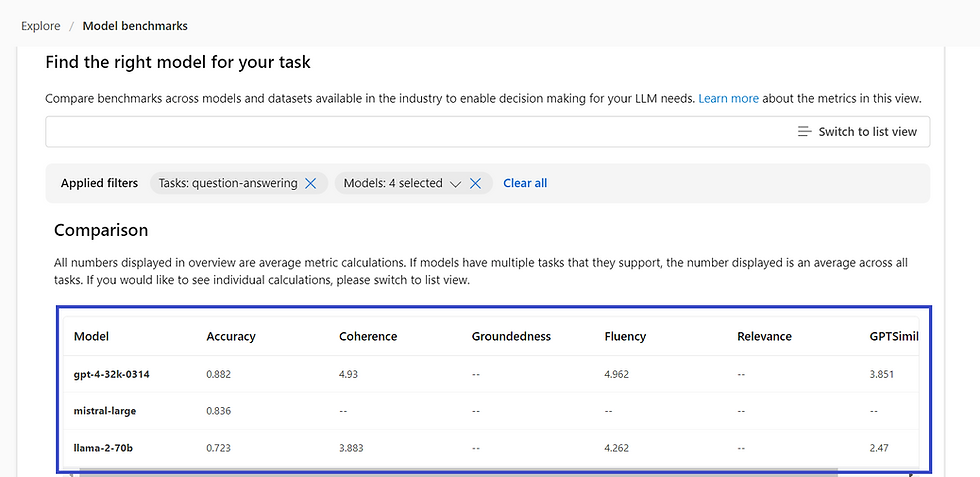

The platform provides LLM quality metrics for OpenAI models such as gpt-4, gpt-4-32k, and gpt-35-turbo, and for Llama 2 models such as Llama-2-7b. These metrics help simplify the model selection process and enable users to make more confident choices when selecting a model for their task.

Model benchmarks allow users to compare these models based on accuracy. This comparison empowers users to make informed and data-driven decisions when selecting the right model for their specific task, ensuring that their AI solutions are optimized for the best performance.

Previously, evaluating model quality could require significant time and resources. With the prebuilt metrics in model benchmarks, users can quickly identify the most suitable model for their project, reducing development time and minimizing infrastructure costs.

In Azure AI Studio, users can access benchmark comparisons within the same environment where they build, train, and deploy their AI solutions. This enhances workflow efficiency and collaboration among team members.

In short, model benchmarks give developers and machine learning experts a practical way to compare industry-leading models like Llama-2-7b, gpt-4, gpt-4-32k, and gpt-35-turbo before committing to one for a project.

How to use Model Benchmark in Azure AI Studio?

Let's walk through the steps involved in using Azure AI Studio's Model Benchmarks to find the perfect AI model for your specific task.

STEP 1: Navigate to the Explore Page

Open Azure AI Studio and navigate to the Explore section. From there, select Model Benchmark.

STEP 2: Select the Models and Task

Azure AI Studio currently supports two types of tasks:

Question Answering

Text Generation

The selection of models depends on your specific needs. You can choose from a variety of models available in Azure AI Studio.

STEP 3: Compare

After selecting the task and models, click on the Compare option. This will initiate the comparison process.

STEP 4: Review the Model Benchmark

This step will provide a list of the best-performing models for the selected task.

The results are also presented as charts for a more visual comparison. This allows you to make an informed decision about which model best suits your needs.

Filters:

Azure AI Studio allows you to set filters to refine your model selection process. This can help you choose a model that is more accurately suited to your specific task. The filters include:

Tasks

This filter allows you to select the specific task that you want the model to perform. Two task options are currently available:

Question Answering: The model uses its understanding of the question, together with any provided context and its own internal knowledge, to generate the most accurate and relevant answer.

Text Generation: The model produces text from a prompt. The generated text could be a continuation of the prompt or a creative piece of content like a story, poem, or essay.

In Azure AI Studio’s model benchmark, you can select either one or both of these tasks for comparison. If you select both, the benchmark will provide performance metrics for each model on both tasks. This can be useful if you’re developing a solution that requires both Question Answering and Text Generation capabilities.
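When a solution needs both capabilities, one simple way to read the two sets of results is to keep only the models that clear a minimum score on every task and then order them by their weakest task. The sketch below illustrates that idea in Python. It is not part of Azure AI Studio: the model names, the scores, and the `models_meeting_bar` helper are all hypothetical placeholders.

```python
# Hypothetical scores per task. The model names and numbers are placeholders,
# not real benchmark results.
scores = {
    "model-a": {"question_answering": 0.82, "text_generation": 0.64},
    "model-b": {"question_answering": 0.75, "text_generation": 0.78},
    "model-c": {"question_answering": 0.58, "text_generation": 0.81},
}


def models_meeting_bar(scores, minimum):
    """Return models scoring at least `minimum` on every task, best worst-case first."""
    qualifying = {
        name: min(per_task.values())  # remember each model's weakest task
        for name, per_task in scores.items()
        if all(value >= minimum for value in per_task.values())
    }
    return sorted(qualifying, key=qualifying.get, reverse=True)


print(models_meeting_bar(scores, minimum=0.6))  # ['model-b', 'model-a']
```

Ranking by the weakest task is only one possible choice. If one task matters more than the other for your project, a weighted average may be a better fit.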

Metrics

In Azure AI Studio, you have the flexibility to choose the performance metric that aligns with your project’s requirements. This choice matters because a model that ranks highest on one metric will not necessarily rank highest on another.

Here are the metrics you can choose from while selecting your ideal model in Azure AI Studio:

Accuracy: Measures how often the model’s responses are correct. It’s a fundamental metric for many machine learning tasks.

Coherence: Evaluates whether the model’s responses make sense and are logically consistent.

Fluency: Assesses the readability of the model’s responses. A fluent response is grammatically correct and flows naturally.

Groundedness: Evaluates whether the model’s responses are supported by the source information or context it was given. A grounded response makes only claims that can be traced back to that input rather than introducing unsupported ones.

Relevance: Measures how closely the model’s responses align with the context of the conversation or the prompt.

GPTSimilarity: Measures how similar the model’s response is to a ground-truth (reference) answer. The name comes from the fact that a GPT model is used to judge the similarity; the metric is not limited to evaluating GPT models.
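To make the Accuracy metric concrete, here is a minimal Python sketch that scores predictions against reference answers using exact match after light normalization. This is an illustration under stated assumptions: the article does not describe how Azure AI Studio computes its scores, and the `normalize` and `exact_match_accuracy` helpers and the toy answers are hypothetical.

```python
def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences do not count as errors."""
    return " ".join(text.lower().split())


def exact_match_accuracy(predictions: list[str], references: list[str]) -> float:
    """Return the fraction of predictions that equal their reference after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        raise ValueError("at least one example is required")
    correct = sum(
        normalize(pred) == normalize(ref)
        for pred, ref in zip(predictions, references)
    )
    return correct / len(references)


# Toy yes/no answers (illustrative values only, not benchmark data).
preds = ["Yes", "no", "yes ", "no"]
refs = ["yes", "no", "no", "no"]
print(exact_match_accuracy(preds, refs))  # 3 of 4 match -> 0.75
```

The other metrics in the list (coherence, fluency, groundedness, relevance, GPTSimilarity) judge the quality of free-form text and cannot be reduced to a string comparison like this one.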

Models

In Azure AI Studio, the “Models” filter allows you to select specific models that you want to compare. This is particularly useful when you want to understand the performance differences between two or more models for a specific task.

For instance, if you previously selected two models for comparison, you can use this filter to revisit that comparison. You can select these models from the available models in Azure AI Studio, including models like GPT-3.5, GPT-4, and the Davinci model.

Datasets

In Azure AI Studio, you can select specific datasets for benchmarking models. This allows you to assess how well the models perform on these datasets, which can be crucial for your specific use case.

Here is the list of datasets available in Azure AI Studio:

boolq: A dataset for yes/no questions.

gsm8k: A dataset of roughly 8,500 grade-school math word problems that require multi-step reasoning.

hellaswag: A dataset designed to test models on common sense reasoning.

human_eval: A dataset of programming problems used to evaluate how well models generate working code.

mmlu_humanities, mmlu_other, mmlu_social_sciences, mmlu_stem: These are subsets of the Massive Multitask Language Understanding (MMLU) benchmark, each focusing on a different domain: humanities, other topics, social sciences, and STEM (Science, Technology, Engineering, Mathematics), respectively.

openbookqa: A question-answering dataset modeled on open-book exams, built around elementary science facts.

piqa: A dataset that focuses on physical commonsense reasoning.

social_iqa: A question-answering dataset that tests commonsense reasoning about social situations.

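truthfulqa_generation, truthfulqa_mc1: These are variants of the TruthfulQA benchmark, which tests whether models avoid repeating common falsehoods. The first scores free-form generated answers, and the second uses multiple-choice questions with a single correct option.

winogrande: A dataset designed to test common sense reasoning through fill-in-the-blank sentences that hinge on resolving an ambiguous reference.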

By selecting the appropriate datasets, you can ensure that the models are benchmarked in a way that aligns with your project’s requirements.
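Conceptually, the filters above slice a table of benchmark results by task, metric, model, and dataset. The Python sketch below mimics that behaviour on a few hand-written rows and then averages the remaining scores per model. It is only an illustration: the `BenchmarkRow` type, the helper functions, the model names, and every score are invented placeholders, and nothing here calls Azure AI Studio.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BenchmarkRow:
    model: str
    task: str
    dataset: str
    metric: str
    score: float


# Placeholder rows: model names and scores are invented for illustration.
ROWS = [
    BenchmarkRow("model-a", "question_answering", "boolq", "accuracy", 0.80),
    BenchmarkRow("model-b", "question_answering", "boolq", "accuracy", 0.86),
    BenchmarkRow("model-a", "question_answering", "hellaswag", "accuracy", 0.74),
    BenchmarkRow("model-b", "question_answering", "hellaswag", "accuracy", 0.70),
    BenchmarkRow("model-a", "text_generation", "human_eval", "accuracy", 0.40),
]


def filter_rows(rows, task=None, metric=None, models=None, datasets=None):
    """Keep rows matching every filter that is set; a filter left as None is ignored."""
    return [
        row for row in rows
        if (task is None or row.task == task)
        and (metric is None or row.metric == metric)
        and (models is None or row.model in models)
        and (datasets is None or row.dataset in datasets)
    ]


def mean_score_by_model(rows):
    """Average the scores of the given rows per model, highest average first."""
    grouped = {}
    for row in rows:
        grouped.setdefault(row.model, []).append(row.score)
    averages = {model: sum(vals) / len(vals) for model, vals in grouped.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


selected = filter_rows(
    ROWS,
    task="question_answering",
    metric="accuracy",
    datasets={"boolq", "hellaswag"},
)
for model, average in mean_score_by_model(selected):
    print(f"{model}: {average:.2f}")
```

Averaging across datasets hides per-dataset differences, so it is worth looking at the individual rows as well when one dataset resembles your use case more closely than the others.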

Conclusion

With Azure AI Studio's Model Benchmarks, choosing the right AI model for your project is no longer a guessing game. This cool feature helps you pick the best model based on facts, not luck. There's no single "perfect" model for everyone, but Model Benchmarks makes it easy to find the one that works best for your specific task. So ditch the endless searching and get started on your project with confidence.
