Analyze Office 365 SharePoint online Data using Azure Data Lake Storage and Analytics Service – 1

- Nakkeeran Natarajan

- Mar 20, 2019

- 2 min read

In this article, we will understand how Microsoft flow can be configured to push the data from Office 365 SharePoint list into Azure Data Lake Storage service. We could also understand, how the data can be analyzed using the Azure Data Lake Analytics service.

In the previous article, you could understand the benefits of using Azure Data Lake Storage & Analytic services. Also, it helps configuring these two Azure services.

Setting up Microsoft Flow

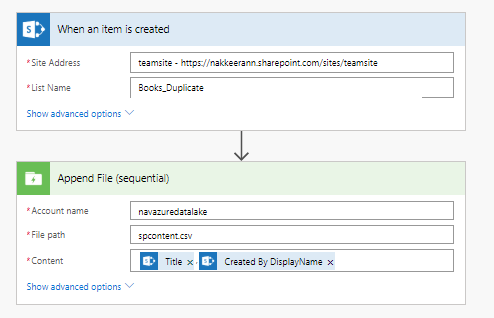

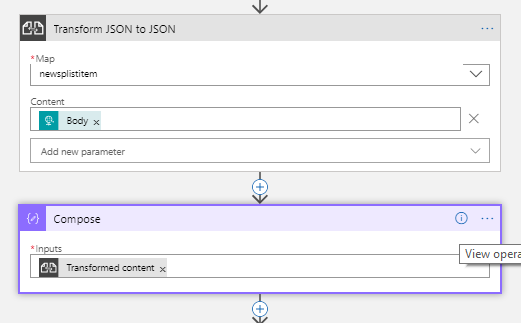

Login to the Microsoft Flow Portal. Go to my flows, and select create flow from blank option. The following snapshot shows the flow being configured.

MS Flow steps to push Data From SharePoint to Azure Data Lake Storage

Once configured, test the trigger.

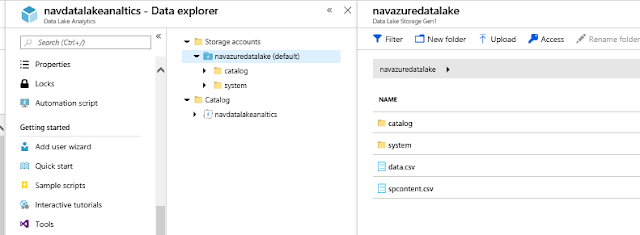

Navigate to the data lake analytics service or data lake storage service -> select data explorer -> Upload the file with name spcontent.csv (The same name has been configured on Microsoft Flow as file path parameter).

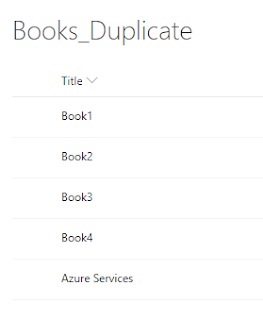

Go the SharePoint list, and create a new entry.

Creating SharePoint Entries for pushing data into Azure Data Lake Storage

Then, once Microsoft Flow successfully completes the run, check the newly created file on Azure Data lake storage. You could find the newly created entries available on the data lake storage. The following picture depicts the same.

Azure Data Lake Storage Explorer View

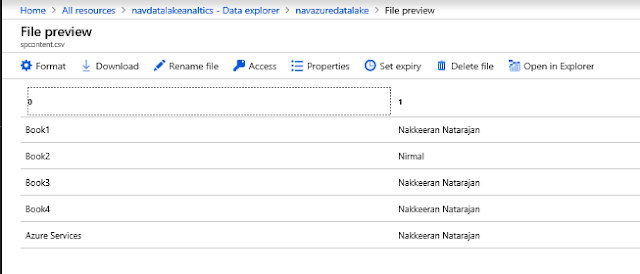

The following snapshot shows the table view of .csv file created.

Azure Data Lake Storage File preview - contains SharePoint Data

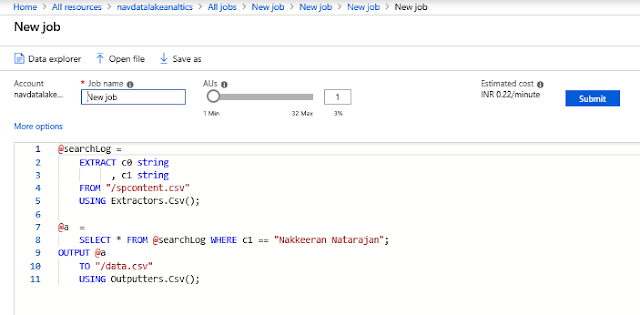

From the data lake analytics service, select overview. Then create a new job by selecting “New Job” from the overview pane. Create a necessary U-SQL script for processing the file content available on the azure data lake storage.

@searchLog =

EXTRACT c0 string

, c1 string

FROM "/spcontent.csv"

USING Extractors.Csv();

@a = SELECT * FROM @searchLog WHERE c1 == "Nakkeeran Natarajan";

OUTPUT @a

TO "/data.csv"

USING Outputters.Csv();

In our scenario, let us extract the items created by one specific person and push it to another file. The following snapshot shows the new job created with U-SQL script for extracting the entries with specific name and pushing it to another file.

U-SQL script for analyzing the Data Lake Storage File Extracted From SharePoint

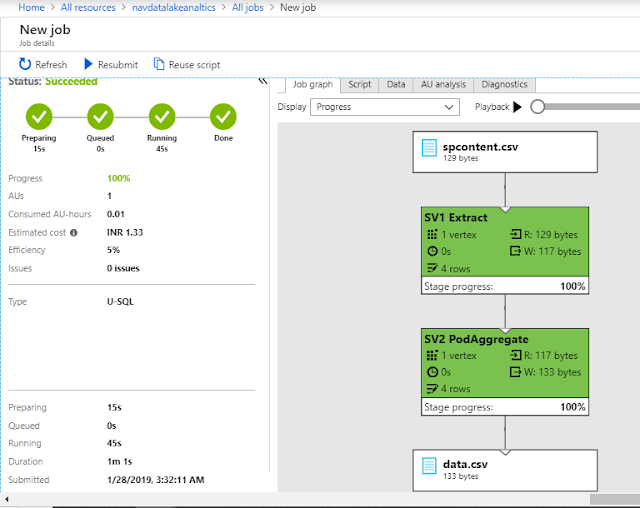

Open the new output file created by the script. The extracted content will be available. The following snapshot shows the graph view of the flow.

Azure Data Lake Analytics Job Graph - Shows the Flow

The following snapshot shows the output file.

Azure Data Lake Storage File - Contains Data Analyzed using Data Lake Analytics

Thus we have seen how to push the data from Office 365 SharePoint into Azure Data Lake Storage service using Microsoft Flow. And we have also written a script on Azure Data Lake Analytics service to process the files generated by the Microsoft Flow. These data is now available for big data analytic tools.

Comments